Childhood cancer increases material hardship

Photo by Bill Branson

Results of a small study reveal the material hardships families experience when a child is undergoing cancer treatment.

Researchers surveyed 99 families of children with cancer.

Six months after the child’s diagnosis, 29% of the families reported having at least one household material hardship, such as food, housing, or energy insecurity.

Twenty percent of the families had reported having such hardships at the time of the child’s diagnosis.

Kira Bona, MD, of Dana-Farber/Boston Children’s Cancer and Blood Disorders Center in Massachusetts, and her colleagues reported results from this survey in Pediatric Blood & Cancer.

The researchers surveyed 99 families of pediatric cancer patients treated at Dana-Farber/Boston Children’s, first within a month of diagnosis and then 6 months later.

At diagnosis, 20% of the families were low-income, defined as a household income at or below 200% of the federal poverty level. Six months later, an additional 12% had suffered income losses that pushed them into the low-income group.

At 6 months, 25% of the families said they had lost more than 40% of their household income due to treatment-related work disruptions. A total of 56% of adults who supported their families experienced a disruption of their work.

This included 15% of parents who either quit their jobs or were laid off as a result of their child’s illness, as well as 37% of respondents who cut their hours or took a leave of absence. Thirty-four percent of these individuals were paid during their leave.
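The two income measures above reduce to simple threshold checks. The sketch below is a hypothetical illustration, not the study's method: it assumes "low-income" means household income at or below 200% of the federal poverty level (FPL), takes the FPL for the household's size as an input rather than hard-coding any official figure, and computes income loss from two income amounts, whereas the study collected this information by survey. The function names are made up for this example.

```python
# Hypothetical helpers illustrating the article's two income measures.
# ASSUMPTION: "low-income" = household income at or below 200% of the FPL;
# the FPL value is supplied by the caller, not taken from the study.


def is_low_income(household_income: float, fpl_for_household: float) -> bool:
    """True if income is at or below 200% of the applicable FPL."""
    return household_income <= 2.0 * fpl_for_household


def fraction_income_lost(income_at_diagnosis: float, income_at_6_months: float) -> float:
    """Share of household income lost between the two survey points (never negative)."""
    if income_at_diagnosis <= 0:
        raise ValueError("income at diagnosis must be positive")
    return max(0.0, (income_at_diagnosis - income_at_6_months) / income_at_diagnosis)


def lost_more_than_40_percent(income_at_diagnosis: float, income_at_6_months: float) -> bool:
    """True if more than 40% of income was lost (exactly 40% does not count)."""
    return fraction_income_lost(income_at_diagnosis, income_at_6_months) > 0.40


if __name__ == "__main__":
    fpl = 25_000.0  # illustrative figure only, not an official guideline
    print(is_low_income(45_000.0, fpl))                   # True: 45,000 <= 50,000
    print(lost_more_than_40_percent(80_000.0, 44_000.0))  # True: 45% lost
```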

At 6 months, 29% of families said they had at least one material hardship. Twenty percent reported food insecurity, 17% reported energy insecurity, and 8% reported housing insecurity.*

The findings surprised the researchers, who said they had expected lower levels of need at their center because it provides psychosocial support for patients and has resource specialists to help families facing financial difficulties.

“What it says is that even at a well-resourced, large referral center, about a third of families are reporting food, housing, or energy insecurity 6 months into treatment,” Dr Bona said. “If anything, the numbers in our study are an underestimate of what might be seen at less well-resourced institutions, which was somewhat surprising to us.”

By focusing on specific material hardships, which can be addressed through governmental or philanthropic support, the researchers hope they have identified variables that are easier for clinicians to ameliorate than overall income.

Dr Bona said subsequent research will examine whether material hardship has the same effect on patient outcomes as low-income status.

“If household material hardship is linked to poorer outcomes in pediatric oncology, just like income is, then we can design interventions to fix food, housing, and energy insecurity,” she said. “It’s not clear what you do about income in a clinical setting.”

*Definitions for household material hardships were as follows.

Food insecurity was measured via the US Household Food Security Survey Module: Six-Item Short Form, which includes questions to assess whether respondents:

- sometimes/often do not have enough food to eat

- sometimes/often cannot afford to eat balanced meals

- sometimes/often worry about having enough money to buy food, etc.
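The short form is scored by counting affirmative answers. The sketch below assumes each answer has already been coded as affirmative or not, and uses a cutoff of 2 or more affirmative answers for food insecurity, which is the usual USDA convention for the six-item form; the article does not say which cutoff the study applied, and the function names are hypothetical.

```python
from typing import Sequence

# ASSUMPTION: usual USDA scoring for the six-item short form, where a raw
# score of 2 or more affirmative answers indicates food insecurity.
FOOD_INSECURE_CUTOFF = 2


def raw_score(affirmative: Sequence[bool]) -> int:
    """Count affirmative answers across the six items."""
    if len(affirmative) != 6:
        raise ValueError("expected one coded answer per item (6 items)")
    return sum(1 for answer in affirmative if answer)


def is_food_insecure(affirmative: Sequence[bool]) -> bool:
    """Apply the assumed cutoff to the raw score."""
    return raw_score(affirmative) >= FOOD_INSECURE_CUTOFF


if __name__ == "__main__":
    answers = [True, True, False, False, False, False]  # illustrative only
    print(raw_score(answers), is_food_insecure(answers))  # 2 True
```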

Families met the definition for housing insecurity if they reported any of the following:

- crowding (defined as >2 people per bedroom in the home)

- multiple moves (>1 move in the prior year)

- doubling up (having to live with other people, even temporarily, because of financial difficulties in the past 6 months).
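Because a family met the housing definition if it reported any one of the three conditions, the rule is a logical OR. A minimal sketch with hypothetical field names: crowding is tested as people > 2 × bedrooms, which matches ">2 people per bedroom" whenever the home has at least one bedroom. How the study handled homes with no separate bedroom isn't stated; here any occupant of such a home counts as crowded, which is an assumption.

```python
from dataclasses import dataclass


@dataclass
class HousingReport:
    """Hypothetical survey fields for the three housing criteria."""
    people_in_home: int
    bedrooms: int
    moves_in_prior_year: int
    doubled_up_past_6_months: bool  # lived with others because of financial difficulties


def is_crowded(r: HousingReport) -> bool:
    # ">2 people per bedroom", written without division so a home with
    # 0 bedrooms does not raise (ASSUMPTION: any occupant then counts as crowded).
    return r.people_in_home > 2 * r.bedrooms


def is_housing_insecure(r: HousingReport) -> bool:
    # Meeting any one criterion is sufficient.
    return is_crowded(r) or r.moves_in_prior_year > 1 or r.doubled_up_past_6_months


if __name__ == "__main__":
    print(is_housing_insecure(HousingReport(5, 2, 0, False)))  # True: 2.5 people per bedroom
    print(is_housing_insecure(HousingReport(4, 2, 1, False)))  # False: no criterion met
```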

Families met the definition for energy insecurity if, in the prior 6 months, they had experienced any of the following:

- received a letter threatening to shut off the gas/electricity/oil to their house because they had not paid the bills

- had the gas/electric/oil company shut off electricity or refused to deliver oil/gas because they had not paid the bills

- had any days that their home was not heated/cooled because they couldn’t pay the bills

- had ever used a cooking stove to heat their home because they couldn’t pay the bills.
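Energy insecurity follows the same any-one-criterion pattern, and a family has "at least one household material hardship" if it is food, housing, or energy insecure. A hypothetical sketch of both rules; the field and function names are illustrative only.

```python
from dataclasses import dataclass


@dataclass
class EnergyReport:
    """Hypothetical record of the four energy-related events listed above."""
    shutoff_threat_letter: bool
    shut_off_or_delivery_refused: bool
    days_without_heating_or_cooling: bool
    used_cooking_stove_for_heat: bool


def is_energy_insecure(r: EnergyReport) -> bool:
    # Any single event is enough to meet the definition.
    return any((
        r.shutoff_threat_letter,
        r.shut_off_or_delivery_refused,
        r.days_without_heating_or_cooling,
        r.used_cooking_stove_for_heat,
    ))


def has_any_material_hardship(food_insecure: bool,
                              housing_insecure: bool,
                              energy_insecure: bool) -> bool:
    # "At least one household material hardship" = food OR housing OR energy.
    return food_insecure or housing_insecure or energy_insecure


if __name__ == "__main__":
    family = EnergyReport(True, False, False, False)
    energy = is_energy_insecure(family)
    print(energy, has_any_material_hardship(False, False, energy))  # True True
```

Because the rule is an OR, one family can count toward more than one category. That is why the category figures reported at 6 months (20% food, 17% energy, and 8% housing, 45% in total) add up to more than the 29% of families with at least one hardship.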

Team identifies new virus in blood supply

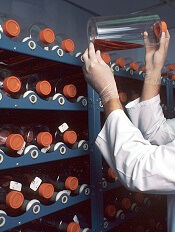

Photo courtesy of UAB Hospital

Scientists say they have discovered a new virus that can be transmitted through the blood supply.

It is currently unclear whether the virus is harmful, but researchers found that it shares genetic features with hepatitis C virus (HCV) and human pegivirus (HPgV), which was formerly known as hepatitis G virus.

The new virus, which the researchers have named human hepegivirus-1 (HHpgV-1), is described in the journal mBio.

“HHpgV-1 is unique because it shares genetic similarity with both highly pathogenic HCV and the apparently non-pathogenic HPgV,” said study author Amit Kapoor, PhD, of Columbia University in New York, New York. “People need to be aware of this new infection in humans.”

To identify HHpgV-1, Dr Kapoor and his colleagues performed high-throughput sequencing on blood samples from 46 individuals in the Transfusion-Transmitted Viruses Study. The samples were collected between July 1974 and June 1980.

The researchers analyzed samples taken both before and after transfusion and, along with a variety of known viruses, identified HHpgV-1 in 2 individuals.

The virus was present only in post-transfusion samples, and additional tests showed that both patients had cleared HHpgV-1.

Genetic analysis revealed that the virus was related to HCV and HPgV. Genomic testing of 70 additional individuals in the Transfusion-Transmitted Viruses Study did not detect further cases of HHpgV-1.

The researchers also performed high-throughput sequencing on samples from 106 individuals in the Multicenter Hemophilia Cohort Study who received plasma-derived clotting factor concentrates.

The team identified HHpgV-1 in 2 individuals, one of whom had persistent long-term infection (5.4 years).

“We just don’t know how many viruses are transmitted through the blood supply,” Dr Kapoor said. “There are so many viruses out there, and they need to be characterized in order to ensure that transfusions are safe.”

He said the next steps are to determine the prevalence of HHpgV-1 and whether it causes disease. If it does, screening the blood supply for the virus would be appropriate.

“Ultimately, once we know more about this, we will look for the presence of this virus in people with certain diseases,” Dr Kapoor said.

“The unusually high infection prevalence of HCV, HBV, and HIV in hemophilia patients and other transfusion recipients could have been prevented by earlier identification of these viruses and development of accurate diagnostic assays.”

Imaging provides clearer picture of HSCs in the bone marrow

By imaging the bone marrow of mice, researchers have uncovered new details about hematopoietic stem cells (HSCs).

The team’s deep imaging technique confirmed some previous findings and unearthed new information about where HSCs are located and how they are maintained.

The researchers said these findings, published in Nature, provide a significant advance toward understanding the microenvironment in which HSCs reside.

“The bone marrow and [HSCs] are like a haystack with needles inside,” said study author Sean Morrison, PhD, of the University of Texas Southwestern Medical Center in Dallas.

“Researchers in the past have been able to find a few stem cells, but they’ve only seen a small percentage of the stem cells that are there, so there has been some controversy about where exactly they’re located.”

“We developed a technique that allows us to digitally reconstruct the entire haystack and see all the needles—all the [HSCs] that are present in the bone marrow—and to know exactly where they are and how far they are from every other cell type.”

The team began by identifying a genetic marker, Ctnnal1, that is expressed almost exclusively in HSCs. They then inserted the gene for green fluorescent protein, originally from jellyfish, into Ctnnal1 so that they could identify the HSCs.

“Using a tissue-clearing technique that makes the bone and bone marrow see-through, and employing a high-resolution, confocal microscope to scan the entire bone marrow compartment, we were able to image large segments of bone marrow to locate every [HSC] and its relation to other cells,” said Melih Acar, PhD, also of the University of Texas Southwestern Medical Center.

The team’s work yielded new findings and confirmed others. They found that HSCs tend to be clustered in the center of the bone marrow, not closer to bone surfaces as some researchers previously thought.

They also confirmed that HSCs are associated with sinusoidal blood vessels, and found that there are no spatially distinct niches for dividing and non-dividing HSCs.

“With this improved understanding of the microenvironment and mechanisms that maintain [HSCs], we are closer to being able to replicate the environment for [HSCs] in culture,” Dr Morrison said.

“That achievement would significantly improve the safety and effectiveness of bone marrow transplants and potentially save thousands of additional lives each year.”

Inflammation, coagulation may contribute to CM pathogenesis

Image by Bruce Blaus

Cells associated with inflammation and coagulation accumulate in the brains of children with cerebral malaria (CM), according to research published in mBio.

The researchers studied autopsied brain tissue from more than 100 African children and found that children with CM had more than 9 times as many intravascular monocytes and platelets as children who did not have malaria.

In addition, HIV-positive children had more than twice as many intravascular monocytes and platelets as children who were HIV-negative.

“Our study clearly shows that HIV exacerbates the disease process in cerebral malaria and also leads to some really interesting insights into what may be going on with children who are dying of cerebral malaria, which has been very controversial,” said study author Kami Kim, MD, of the Albert Einstein College of Medicine in the Bronx, New York.

“Children who are HIV-positive and at risk for malaria may benefit from targeted antimalaria drugs, and adjunctive therapies that target inflammation or blood clotting may improve outcomes from CM.”

CM is one of the most severe complications of malaria and can lead to behavioral problems, seizures, coma, or death. It is mainly seen in children younger than 5 living in sub-Saharan Africa.

CM is fairly rare, affecting about 2% of children with malaria, but it is thought to be responsible for half of malaria deaths.

“So it’s a big deal,” Dr Kim said. “The more we know about CM, the more that we can theoretically do something either to better treat or prevent it.”

With this goal in mind, Dr Kim and her colleagues analyzed children enrolled in an ongoing study of pediatric CM in Blantyre, Malawi. The researchers enrolled more than 3000 participants and performed 103 autopsies on children who died of either CM or other causes of coma.

HIV prevalence was higher than expected and led to higher mortality in CM patients. The prevalence of HIV was 14.5% in children enrolled in the study, compared to 2% in the general Malawi pediatric population.

Twenty-three percent of HIV-positive children died, while 17% of those without HIV died. Twenty percent of autopsy cases were HIV-positive.

The researchers noted that HIV-infected children with CM were older than children without HIV (an average of 99 months vs 32 months) and were not severely immunocompromised.

In addition, in HIV-positive children with CM, monocytes and platelets were significantly more prevalent than neutrophils.

“We identified a unique and pervasive pathology pattern in pediatric CM, marked by monocytes and platelets, which is more severe in HIV-positive children,” Dr Kim said.

“It doesn’t prove that these cells cause clinical disease, but the fact that they’re there in huge abundance when there’s a lot of parasites is pretty strongly suggestive evidence that they’re doing something. We never see that in healthy brain tissue.”

Additional studies of children with varying severities of malaria are necessary to design better treatment algorithms, Dr Kim added.

Monitoring microbiome may help reduce infection in AML

Photo by Rhoda Baer

SAN DIEGO—Monitoring the microbiome during chemotherapy might help reduce infections in leukemia patients, according to research presented at ICAAC/ICC 2015.

The researchers studied buccal and fecal samples from patients with acute myeloid leukemia (AML) who were undergoing induction chemotherapy.

This revealed that decreased microbial diversity was associated with an increased risk of infection.

Jessica Galloway-Peña, PhD, of The University of Texas MD Anderson Cancer Center in Houston, and her colleagues described this work in a poster presentation at the meeting (poster B-993).

The team analyzed samples from 34 AML patients. All of the patients received prophylactic antimicrobials, and 91% received systemic antibiotics. The patients received an average of 5.4 different antibiotics for an average duration of 6.5 days.

The researchers collected buccal and fecal specimens from the patients every 96 hours over the course of induction chemotherapy. This yielded 276 buccal and 202 fecal samples—an average of 8 oral and 6 stool samples per patient.

The team used 16S rRNA V4 region sequencing to assign bacterial taxa and calculate α- and β-diversities, obtaining a total of 16,082,550 high-quality reads.
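The summary does not say which α- and β-diversity metrics the team used. As a rough illustration only, the sketch below assumes two common choices: the Shannon index for α-diversity within a single sample, and Bray-Curtis dissimilarity for β-diversity between two samples, both computed from read counts already assigned to taxa. The function names and the taxon counts are hypothetical.

```python
import math
from collections.abc import Mapping

# Assumption: Shannon and Bray-Curtis are illustrative choices;
# the study's actual diversity metrics are not stated in the summary.


def shannon_diversity(counts: Mapping[str, int]) -> float:
    """Alpha diversity of one sample: Shannon index H = -sum(p_i * ln(p_i))."""
    total = sum(counts.values())
    if total == 0:
        raise ValueError("sample has no reads")
    h = 0.0
    for n in counts.values():
        if n > 0:  # taxa with zero reads contribute nothing
            p = n / total
            h -= p * math.log(p)
    return h


def bray_curtis(a: Mapping[str, int], b: Mapping[str, int]) -> float:
    """Beta diversity between two samples: 0 = identical counts, 1 = no shared taxa."""
    taxa = set(a) | set(b)
    shared = sum(min(a.get(t, 0), b.get(t, 0)) for t in taxa)
    total = sum(a.values()) + sum(b.values())
    if total == 0:
        raise ValueError("both samples are empty")
    return 1.0 - 2.0 * shared / total


# Hypothetical genus-level read counts for one patient's stool samples.
baseline = {"Bacteroides": 400, "Faecalibacterium": 300, "Streptococcus": 300}
later = {"Bacteroides": 100, "Streptococcus": 900}

print(round(shannon_diversity(baseline), 3))  # 1.089
print(round(shannon_diversity(later), 3))     # 0.325
print(bray_curtis(baseline, later))           # 0.6
```

A drop in the Shannon index between samples is the kind of loss of diversity the study links to infection risk. Bray-Curtis on raw counts is sensitive to sequencing depth, so samples are usually rarefied or converted to relative abundances first; both hypothetical samples here have 1,000 reads, which sidesteps that issue.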

Analyzing these data, the researchers found that decreased microbial diversity, both at baseline and throughout induction, was associated with an increased risk of infection.

“We found the baseline microbial diversities from stool samples were significantly lower in patients that developed infections during chemotherapy compared to those that did not [P=0.006],” Dr Galloway-Peña said.

She and her colleagues also found that, overall, there was a significant decrease in oral (P=0.006) and intestinal (P<0.001) microbial diversity over the course of chemotherapy, although not all patients experienced decreases. There was a linear correlation between oral and stool microbiome changes (P=0.004).

In addition, over the course of induction, there was a significant increase (P=0.02) in the rates of bacterial domination (>30% of the microbiome dominated by 1 organism) by common causes of bacteremia, such as Streptococcus, Bacteroides, Rothia, and Staphylococcus.
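The domination criterion reduces to a simple threshold on relative abundance. A minimal sketch, assuming each sample is a mapping from genus to read count and that the "rate" is the fraction of samples in which a bacteremia-associated genus exceeds 30% of reads; the function names and example counts are illustrative, not the study's own code.

```python
from collections.abc import Mapping, Sequence
from typing import Optional

# Threshold from the poster summary: >30% of the microbiome from a single organism.
DOMINATION_THRESHOLD = 0.30

# Genera the article names as common causes of bacteremia (illustrative, not exhaustive).
BACTEREMIA_GENERA = frozenset({"Streptococcus", "Bacteroides", "Rothia", "Staphylococcus"})


def dominant_taxon(counts: Mapping[str, int], threshold: float = DOMINATION_THRESHOLD) -> Optional[str]:
    """Return the most abundant taxon if its relative abundance exceeds the threshold, else None."""
    total = sum(counts.values())
    if total == 0:
        return None
    taxon, n = max(counts.items(), key=lambda item: item[1])
    return taxon if n / total > threshold else None


def domination_rate(samples: Sequence[Mapping[str, int]], genera: frozenset = BACTEREMIA_GENERA) -> float:
    """Assumed definition: fraction of samples dominated by one of the given genera."""
    if not samples:
        return 0.0
    dominated = sum(1 for s in samples if dominant_taxon(s) in genera)
    return dominated / len(samples)


# Hypothetical read counts for two oral samples from one patient.
early = {"Streptococcus": 25, "Rothia": 25, "Veillonella": 25, "Prevotella": 25}
late = {"Streptococcus": 60, "Rothia": 20, "Veillonella": 20}
print(dominant_taxon(early))           # None (no taxon above 30%)
print(dominant_taxon(late))            # Streptococcus
print(domination_rate([early, late]))  # 0.5
```

The comparison is strict (more than 30%, as stated), so a taxon at exactly 30% does not count as dominant.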

However, patients who maintained a healthy microbiome overall, or whose microbial diversity increased over the induction course, remained infection-free in the 90 days after induction.

Dr Galloway-Peña and her colleagues also assessed the role common antibiotics play in microbial diversity and found that carbapenems significantly decreased diversity.

Oral and stool diversity differed significantly between patients who received carbapenems for at least 72 hours and those who did not (P=0.03). There was no significant difference for piperacillin-tazobactam (P=1.0) or cefepime (P=0.48).

“This study shows that, in the future, doctors could use microbiome sampling in order to predict the chance of infectious complications during chemotherapy and that monitoring of a patient’s microbiome during induction chemotherapy could also predict their risk for microbial-related illness during subsequent treatments,” Dr Galloway-Peña said.

In addition, monitoring the microbiome could help curb the overuse of antimicrobials by allowing physicians to stratify patients according to their risk of developing an infection.

Study reveals mechanism of multidrug resistance in malaria

Image by Peter H. Seeberger

Researchers say they have identified a mechanism of multidrug resistance in malaria that represents a major threat to antimalarial drug policy.

The team exposed a strain of malaria parasites to artemisinin (the base compound for standard therapy) long-term and found the parasites developed widespread resistance to most other antimalarial drugs.

The group also discovered that this type of resistance cannot be detected by current assays.

Françoise Benoit-Vical, PhD, of Université de Toulouse in France, and her colleagues described this work in Emerging Infectious Diseases.

The researchers set out to study resistance mechanisms in Plasmodium falciparum parasites, exposing the parasites to artemisinin in vitro for 5 years.

Parasites that survived in the presence of artemisinin developed resistance to most other artemisinin-based or non-artemisinin-based antimalarial therapies, including partner molecules present in combination therapies used in endemic areas.

These parasites did not carry any known mutations in resistance genes. Instead, the researchers found, the parasites could circumvent the toxic effect of the drugs by entering a quiescent state.

The parasites were able to suspend their development during exposure to antimalarial agents. As soon as they were no longer subjected to antimalarial therapy, they “woke up” and began to proliferate again.

The researchers also found that multidrug resistance based on this quiescence phenomenon cannot be detected by tests currently used to analyze parasitic resistance.

“In vitro tests carried out using the patient’s blood predict high sensitivity and, therefore, the treatment’s effectiveness, while parasites are resistant because they are quiescent,” Dr Benoit-Vical said.

“As such, it is essential to conduct research with relevant and appropriate tests in the field if the multi[drug]-resistant phenomenon that we identified in vitro is also present, in order to design therapeutic strategies accordingly.”

Optimizing investment in US research

Photo by Daniel Sone

A new report suggests a need to change regulations for federally funded research in the US.

According to the report, the current regulatory burden is diminishing the effectiveness of the US scientific enterprise and lowering the return on the federal investment in research by directing investigators’ time away from research and toward administrative matters.

The report, Optimizing the Nation’s Investment in Academic Research: A New Regulatory Framework for the 21st Century: Part 1 (2015), was compiled by the National Academies of Sciences, Engineering, and Medicine.

“Federal regulations and reporting requirements, which began as a way to exercise responsible oversight, have increased dramatically in recent decades and are now unduly encumbering the very research enterprise they were intended to facilitate,” said Larry Faulkner, chair of the committee that conducted the study and wrote the report, and president emeritus of the University of Texas, Austin.

“A significant amount of investigators’ time is now spent complying with regulations, taking valuable time from research, teaching, and scholarship.”

The report lists specific actions the government and research institutions should take to reduce the regulatory burden.

New framework needed

The report says a new framework is needed to approach regulation in a holistic, rather than piecemeal, way. Regulatory requirements should be harmonized across funding agencies, and funding agencies and research institutions need a more effective and efficient partnership.

Congress should create a Research Policy Board to serve as a public-private forum for discussions related to regulation of federally funded research programs. The board should be a government-enabled, private-sector entity that will foster more effective conception, development, and synchronization of research policies.

The board should be formally connected to government through a new associate director position at the White House Office of Science and Technology Policy (OSTP) and through the Office of Information and Regulatory Affairs at the White House Office of Management and Budget (OMB).

Strengthening the research partnership also requires that universities demand the highest standards in institutional and individual behavior, foster a culture of integrity, and mete out appropriate sanctions when behavior deviates from ethical and professional norms, the report says.

The Research Policy Board should collaborate with research institutions to develop a policy that holds universities accountable, sanctioning institutions that fail to enforce standards.

The report also recommends a number of specific actions—a sample of which are listed below—that are intended to improve the efficiency of federal regulation and reduce duplication.

Congress should:

- Work with OMB to conduct a review of agency research grant proposal documents for the purpose of developing a uniform format to be used by all funding agencies

- Work with OSTP and research institutions to develop a single financial conflict-of-interest policy to be used by all research funding agencies

- Task a single agency with overseeing and unifying efforts to develop a central database of investigators and their professional output

- Direct agencies to align and harmonize their regulations and definitions concerning the protection of human subjects

- Instruct OSTP to convene representatives from federal agencies that fund animal research and from the research community to assess and report back to Congress on the feasibility and usefulness of a unified federal approach to policies and regulations pertaining to the care and use of research animals.

The White House OMB should:

- Require that research funding agencies use a uniform format for research progress reporting

- Amend the new Uniform Guidance to improve the efficiency and consistency of procurement standards, financial reporting, and cost accounting.

Federal agencies should:

- Limit research proposals to the minimum information necessary to permit peer evaluation of the merit of the scientific questions being asked, the feasibility of answering those questions, and the ability of the investigator to carry out that research. Any supplementary information (institutional review board approval, conflict-of-interest disclosures, detailed budgets, etc.) should be provided “just in time,” after the research proposal is deemed likely to be funded

- Reduce and streamline reporting, assurances, and verifications. Agencies should also develop a central repository to house assurances.

Universities should:

- Conduct a review of institutional policies developed to comply with federal regulations of research to determine whether the institution itself has created excessive or unnecessary self-imposed burden

- Revise self-imposed burdensome institutional policies that go beyond those necessary and sufficient to comply with federal, state, and local requirements.

The release of the report completes the first phase of the committee’s study, which was expedited at the request of Congress.

The committee will now continue its assessment and issue an addendum report in spring 2016 covering issues it was unable to address in the first phase.

First-line BV can produce high response rate in older HL patients

Photo from Business Wire

First-line treatment with brentuximab vedotin (BV) can produce a high response rate in older Hodgkin lymphoma (HL) patients who are unfit for chemotherapy, according to research published in Blood.

In this small study, single-agent BV produced an overall response rate of 92% and a complete response rate of 73%.

However, the drug also produced a high rate of peripheral sensory neuropathy (78%), which was the most common adverse event.

This phase 2 trial is the first to assess BV as front-line treatment. The study was funded by Seattle Genetics, Inc., which is developing BV in collaboration with Takeda Pharmaceutical Company.

Andres Forero-Torres, MD, of the University of Alabama at Birmingham, and his colleagues conducted the research, enrolling 27 HL patients (ages 64 to 92) in the trial.

The patients were either ineligible for conventional chemotherapy or had declined it after being informed of its risks.

They received 1.8 mg/kg of intravenous BV every 3 weeks for up to 16 doses. Patients who benefited from the drug could continue beyond 16 doses until disease progression, unacceptable toxicity, or study closure.

Patients received a median of 8 cycles, with 4 patients completing 16 cycles and 1 patient completing 23 cycles.

Peripheral neuropathy was the primary adverse event leading to dose modifications. Fourteen patients (52%) had dose delays, typically lasting 1 week (range, 1 to 3 weeks), and 11 patients (41%) required permanent dose reductions to 1.2 mg/kg.

Safety

All 27 patients were evaluable for safety, and all experienced at least 1 adverse event. The most commonly reported events were peripheral sensory neuropathy (n=21, 78%), fatigue (n=12, 44%), and nausea (n=12, 44%).

Treatment-emergent grade 3 adverse events included peripheral sensory neuropathy (n=7, 26%), rash (n=2, 7%), urinary tract infection (n=1, 4%), and maculopapular rash (n=1, 4%).

There were 2 grade 4 events—hyperuricemia and drug hypersensitivity to anesthesia—considered unrelated to BV.
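Every percentage in the safety results, like the dose-modification figures, uses the 27 safety-evaluable patients as its denominator. The short Python sketch below recalculates them from the reported counts as a consistency check. It is an illustrative recalculation, not part of the study's own analysis, and it assumes the article rounds to the nearest whole percent; the names `pct` and `SAFETY_EVALUABLE` are hypothetical.

```python
# Reported counts; the denominator is the 27 safety-evaluable patients
SAFETY_EVALUABLE = 27

dose_modifications = {
    "dose delay": 14,
    "permanent reduction to 1.2 mg/kg": 11,
}
any_grade_events = {
    "peripheral sensory neuropathy": 21,
    "fatigue": 12,
    "nausea": 12,
}
grade_3_events = {
    "peripheral sensory neuropathy": 7,
    "rash": 2,
    "urinary tract infection": 1,
    "maculopapular rash": 1,
}


def pct(n: int, d: int = SAFETY_EVALUABLE) -> int:
    """Share of patients as a percentage, rounded to the nearest whole number (assumed convention)."""
    return round(100 * n / d)


# Print each count alongside its recalculated percentage
for label, table in (
    ("Dose modification", dose_modifications),
    ("Any grade", any_grade_events),
    ("Grade 3", grade_3_events),
):
    for event, n in table.items():
        print(f"{label}: {event}, n={n} ({pct(n)}%)")
```

Each recalculated value (52%, 41%, 78%, 44%, 26%, 7%, and 4%) matches the figure reported in the article.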

Efficacy

Twenty-six patients were evaluable for efficacy. One patient was found to have nodular lymphocyte predominant HL and was therefore excluded.

The overall response rate was 92%. Nineteen patients had a complete response, 5 had a partial response, and 2 had stable disease.
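The headline response rates follow from these counts. The overall response rate counts complete plus partial responses (stable disease is not a response) and divides by the 26 efficacy-evaluable patients; the complete response rate uses complete responses alone. A minimal Python recalculation, assuming the article rounds to the nearest whole percent (the helper `pct` is hypothetical):

```python
# Best responses reported for the 26 efficacy-evaluable patients
evaluable = 26
complete = 19  # complete responses (CR)
partial = 5    # partial responses (PR)
stable = 2     # stable disease, not counted as a response


def pct(n: int, d: int) -> int:
    """Percentage rounded to the nearest whole number (assumed reporting convention)."""
    return round(100 * n / d)


# Sanity check: every evaluable patient falls into exactly one category
assert complete + partial + stable == evaluable

overall_response_rate = pct(complete + partial, evaluable)  # (CR + PR) / evaluable
complete_response_rate = pct(complete, evaluable)           # CR / evaluable

print(f"ORR: {overall_response_rate}%")
print(f"CR rate: {complete_response_rate}%")
```

Running it prints `ORR: 92%` and `CR rate: 73%`, matching the rates reported for the study.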

The median duration of response was 9.1 months (range, 2.8 to 20.9+ months).

The median progression-free survival was 10.5 months (range, 2.6+ to 22.3+ months), and the median overall survival had not been reached at the time of analysis (range, 4.6+ to 24.9+ months).

“While we observed promising responses,” Dr Forero-Torres said, “the next step is to evaluate this drug in combination with additional chemotherapy or immunotherapies that might allow us to prolong the response without relapse.”
