User login

The Cause of All That Stress: Tonsillectomy?

This transcript has been edited for clarity.

You know those times in your life when you’re just feeling ... stressed? You’re on the edge; you have no chill; everything just sort of gets to you. If you can step away from the anxiety for a moment, you might ask yourself where it’s all coming from. Is it really the stuff in your inbox at work or is it money issues at home? Is it something with your relationship, or maybe it’s your sleep quality or your diet? One thing you probably won’t blame for those acute stress reactions is the tonsillectomy you had as a kid. But according to new research, maybe you should.

Tonsillectomy and adenoidectomy are among the most common surgical procedures young people in the United States undergo, with about 300,000 cases a year, according to recent numbers. That’s down a bit from numbers a decade or so ago, but suffice it to say, a good chunk of the population is walking around right now without their tonsils.

The data supporting tonsillectomy have never been great. The two big indications for the surgery are recurrent sore throat — data show that tonsillectomy reduces this by about 0.7 sore throats per year— and obstructive sleep apnea (OSA). The data for improvement of OSA are a bit better than the data for sore throats.

Also, tonsillectomy is a relatively quick, relatively well-reimbursed surgery with indications that are — let’s be honest — somewhat subjective, and so variation is high. One study found that in a single Vermont town, nearly 60% of the population had had their tonsils removed by the time they turned 18. A few towns over, the rate was 20%.

A few factors have led to the decline of tonsillectomy in recent years. Reimbursement rates have gone down a bit. Additionally, better data collection and statistical analysis have shown that the benefits of the procedure are relatively modest.

And then there is a body of medical literature that at first struck me as surprising and almost bizarre: data linking tonsillectomy to subsequent physical and psychiatric disorders.

I teach a course on interpretation of the medical literature, and one of the first things I teach my students is to check their gut when they see the conclusion of a study.

Basically, even before you read the data, have a sense in your own mind if the hypothesis seems reasonable. If a paper is going to conclude that smoking leads to increased risk for bone cancer, I’d say that seems like a reasonable thing to study. If a paper purports to show a link between eating poultry and bone cancer, I’m going to be reading it with quite a bit more skepticism.

The technical term for that process is assessing “biologic plausibility.” If we’re talking tonsils, we have to ask ourselves: Is it plausible that removing someone’s tonsils when they are young should lead to major problems in the future?

At first blush, it didn’t seem very plausible to me.

But the truth is, there are quite a few studies out there demonstrating links like this: links between tonsillectomy and irritable bowel syndrome; links between tonsillectomy and cancer; links between tonsillectomy and depression.

And this week, appearing in JAMA Network Open, is a study linking tonsillectomy with stress disorders.

Researchers leveraged Sweden’s health database, which contains longitudinal data on basically every person who has lived in Sweden since 1981. This database let them know who had a tonsillectomy or adenoidectomy, and when, and what happened to them later in life.

I think the best way to present these data is to show you what they found, and then challenge that finding, and then show you what they did in anticipation of the challenges we would have to their findings. It’s a pretty thorough study.

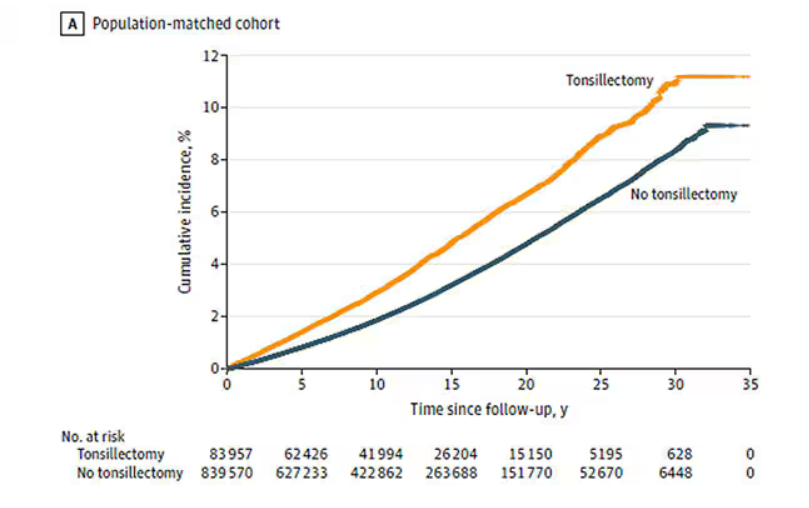

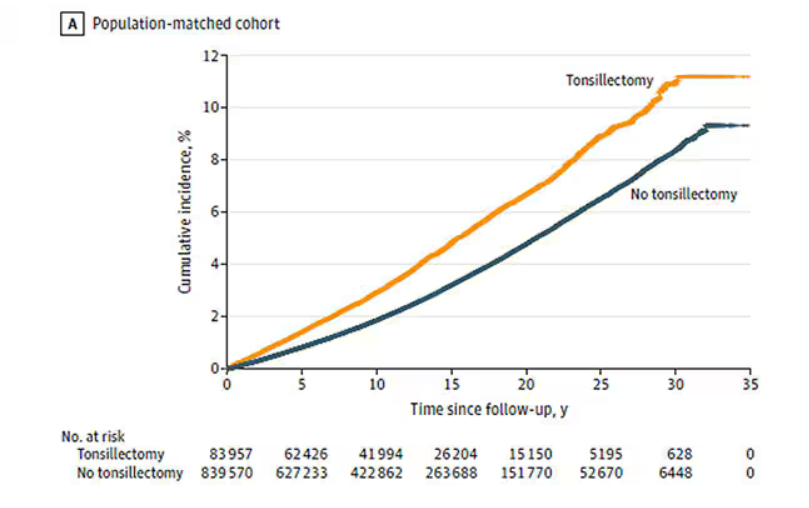

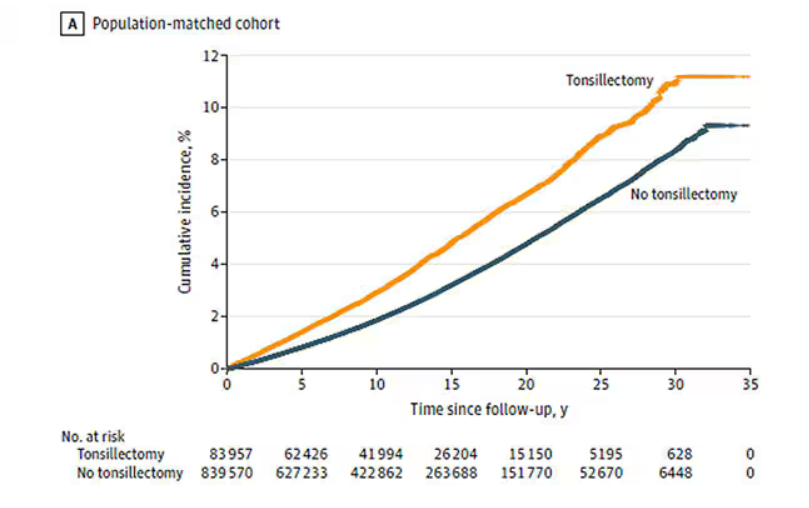

So, topline results here. The researchers first identified 83,957 individuals who had their tonsils removed. They matched each of them with 10 controls who did not have their tonsils removed but were the same age and sex.

Over around 30 years of follow-up, those people who had their tonsils removed were 43% more likely to develop a stress-related disorder. Among the specific disorders, the risk for PTSD was substantially higher: 55% higher in the tonsillectomy group.

That’s pretty surprising, but I bet you already want to push back against this. Sure, the control group was the same age and sex, but other factors might be different between the two groups. You’d be right to think so. People who got their tonsils out were more likely to have parents with a history of stress-related disorders and who had lower educational attainment. But the primary results were adjusted for those factors.

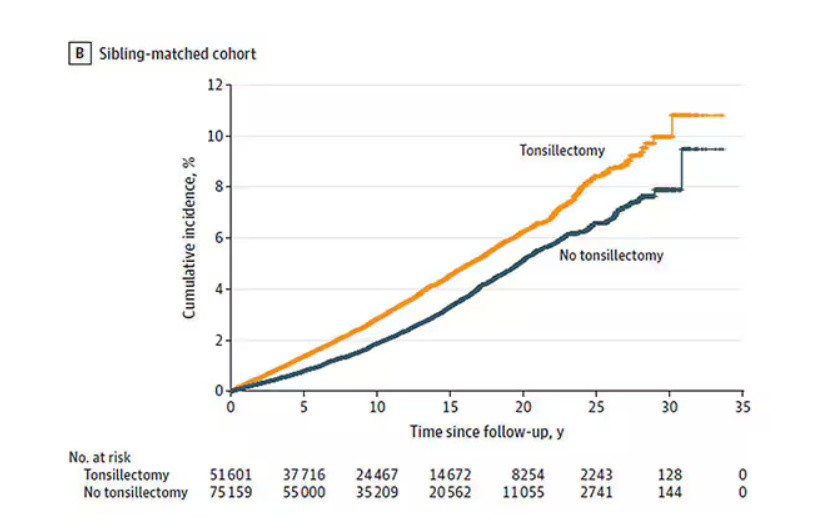

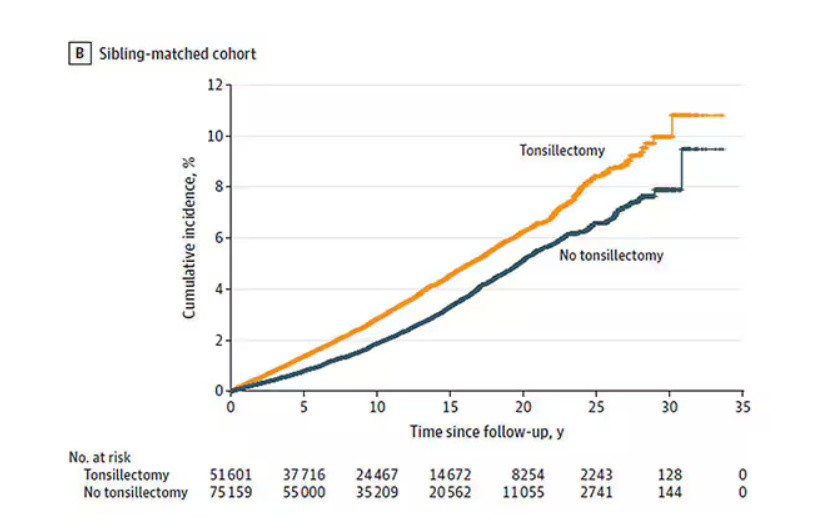

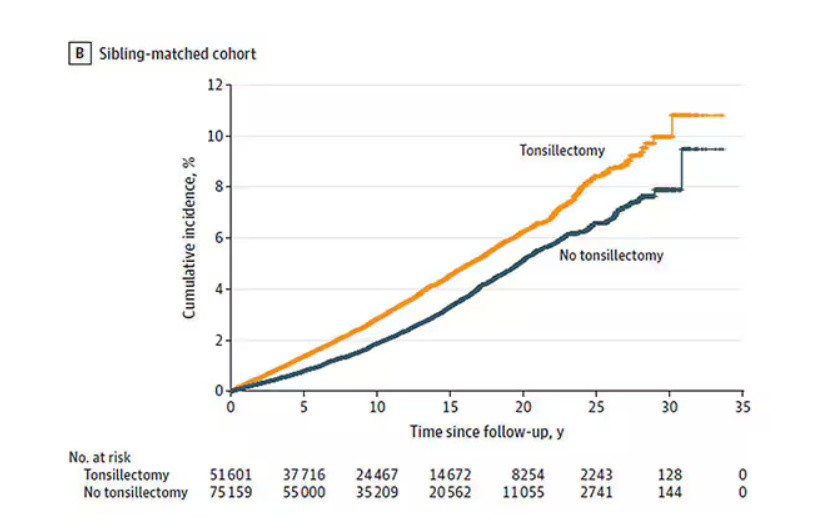

There’s more to a family than parental educational attainment, of course. To account for household factors that might be harder to measure, the researchers created a second control group, this one comprising the siblings of people who had their tonsils removed but who hadn’t themselves had their tonsils removed.

The relationship between tonsillectomy and stress disorders in this population was not quite as robust but still present: a 34% increase in any stress disorder and a 41% increase in the risk for PTSD.

Maybe kids who get their tonsils out are just followed more closely thereafter, so doctors might notice a stress disorder and document it in the medical record; whereas with other kids it might go unnoticed. This is known as ascertainment bias. The researchers addressed this in a sensitivity analysis where they excluded new diagnoses of stress disorders that occurred in the first 3 years after tonsillectomy. The results were largely unchanged.

So how do we explain these data? We observe a correlation between tonsillectomy in youth and stress disorders in later life. But correlation is not causation. One possibility, perhaps even the most likely possibility, is that tonsillectomy is a marker of some other problem. Maybe these kids are more prone to infections and are therefore more likely to need their tonsils removed. Then, after a lifetime of more infections than average, their stress responses are higher. Or maybe kids with a higher BMI are more likely to have their tonsils removed due to sleep apnea concerns, and it’s that elevated BMI that leads to higher stress in later life.

Or maybe this is causal. Maybe there actually is biological plausibility here. The authors suggest that removal of tonsils might lead to broader changes in the immune system; after all, tonsillar tissue is on the front line of our defense against pathogens that might enter our bodies through our mouths or noses. Immunologic changes lead to greater inflammation over time, and there is decent evidence to link chronic inflammation to a variety of physical and psychological disorders.

In support of this, the authors show that the kids with tonsillectomy were more likely to be hospitalized for an infectious disease in the future as well, in magnitudes similar to the increased risk for stress. But they don’t actually show that the relationship between tonsillectomy and stress is mediated by that increased risk for infectious disease.

In the end, I find these data really intriguing. Before I dug into the literature, it seemed highly unlikely that removal of these small lumps of tissue would have much of an effect on anything. Now I’m not so sure. A few things can be removed from the human body without any consequences, but it can be hard to know exactly what those consequences are.

That said, given the rather marginal benefits of tonsillectomy and the growing number of studies expanding on the risks, I expect that we’ll see the rates of the surgery decline even further in the future.

F. Perry Wilson, MD, MSCE, is an associate professor of medicine and public health and director of Yale’s Clinical and Translational Research Accelerator in New Haven, Connecticut. He reported no relevant conflicts of interest.

A version of this article first appeared on Medscape.com.

This transcript has been edited for clarity.

You know those times in your life when you’re just feeling ... stressed? You’re on the edge; you have no chill; everything just sort of gets to you. If you can step away from the anxiety for a moment, you might ask yourself where it’s all coming from. Is it really the stuff in your inbox at work or is it money issues at home? Is it something with your relationship, or maybe it’s your sleep quality or your diet? One thing you probably won’t blame for those acute stress reactions is the tonsillectomy you had as a kid. But according to new research, maybe you should.

Tonsillectomy and adenoidectomy are among the most common surgical procedures young people in the United States undergo, with about 300,000 cases a year, according to recent numbers. That’s down a bit from numbers a decade or so ago, but suffice it to say, a good chunk of the population is walking around right now without their tonsils.

The data supporting tonsillectomy have never been great. The two big indications for the surgery are recurrent sore throat — data show that tonsillectomy reduces this by about 0.7 sore throats per year— and obstructive sleep apnea (OSA). The data for improvement of OSA are a bit better than the data for sore throats.

Also, tonsillectomy is a relatively quick, relatively well-reimbursed surgery with indications that are — let’s be honest — somewhat subjective, and so variation is high. One study found that in a single Vermont town, nearly 60% of the population had had their tonsils removed by the time they turned 18. A few towns over, the rate was 20%.

A few factors have led to the decline of tonsillectomy in recent years. Reimbursement rates have gone down a bit. Additionally, better data collection and statistical analysis have shown that the benefits of the procedure are relatively modest.

And then there is a body of medical literature that at first struck me as surprising and almost bizarre: data linking tonsillectomy to subsequent physical and psychiatric disorders.

I teach a course on interpretation of the medical literature, and one of the first things I teach my students is to check their gut when they see the conclusion of a study.

Basically, even before you read the data, have a sense in your own mind if the hypothesis seems reasonable. If a paper is going to conclude that smoking leads to increased risk for bone cancer, I’d say that seems like a reasonable thing to study. If a paper purports to show a link between eating poultry and bone cancer, I’m going to be reading it with quite a bit more skepticism.

The technical term for that process is assessing “biologic plausibility.” If we’re talking tonsils, we have to ask ourselves: Is it plausible that removing someone’s tonsils when they are young should lead to major problems in the future?

At first blush, it didn’t seem very plausible to me.

But the truth is, there are quite a few studies out there demonstrating links like this: links between tonsillectomy and irritable bowel syndrome; links between tonsillectomy and cancer; links between tonsillectomy and depression.

And this week, appearing in JAMA Network Open, is a study linking tonsillectomy with stress disorders.

Researchers leveraged Sweden’s health database, which contains longitudinal data on basically every person who has lived in Sweden since 1981. This database let them know who had a tonsillectomy or adenoidectomy, and when, and what happened to them later in life.

I think the best way to present these data is to show you what they found, and then challenge that finding, and then show you what they did in anticipation of the challenges we would have to their findings. It’s a pretty thorough study.

So, topline results here. The researchers first identified 83,957 individuals who had their tonsils removed. They matched each of them with 10 controls who did not have their tonsils removed but were the same age and sex.

Over around 30 years of follow-up, those people who had their tonsils removed were 43% more likely to develop a stress-related disorder. Among the specific disorders, the risk for PTSD was substantially higher: 55% higher in the tonsillectomy group.

That’s pretty surprising, but I bet you already want to push back against this. Sure, the control group was the same age and sex, but other factors might be different between the two groups. You’d be right to think so. People who got their tonsils out were more likely to have parents with a history of stress-related disorders and who had lower educational attainment. But the primary results were adjusted for those factors.

There’s more to a family than parental educational attainment, of course. To account for household factors that might be harder to measure, the researchers created a second control group, this one comprising the siblings of people who had their tonsils removed but who hadn’t themselves had their tonsils removed.

The relationship between tonsillectomy and stress disorders in this population was not quite as robust but still present: a 34% increase in any stress disorder and a 41% increase in the risk for PTSD.

Maybe kids who get their tonsils out are just followed more closely thereafter, so doctors might notice a stress disorder and document it in the medical record; whereas with other kids it might go unnoticed. This is known as ascertainment bias. The researchers addressed this in a sensitivity analysis where they excluded new diagnoses of stress disorders that occurred in the first 3 years after tonsillectomy. The results were largely unchanged.

So how do we explain these data? We observe a correlation between tonsillectomy in youth and stress disorders in later life. But correlation is not causation. One possibility, perhaps even the most likely possibility, is that tonsillectomy is a marker of some other problem. Maybe these kids are more prone to infections and are therefore more likely to need their tonsils removed. Then, after a lifetime of more infections than average, their stress responses are higher. Or maybe kids with a higher BMI are more likely to have their tonsils removed due to sleep apnea concerns, and it’s that elevated BMI that leads to higher stress in later life.

Or maybe this is causal. Maybe there actually is biological plausibility here. The authors suggest that removal of tonsils might lead to broader changes in the immune system; after all, tonsillar tissue is on the front line of our defense against pathogens that might enter our bodies through our mouths or noses. Immunologic changes lead to greater inflammation over time, and there is decent evidence to link chronic inflammation to a variety of physical and psychological disorders.

In support of this, the authors show that the kids with tonsillectomy were more likely to be hospitalized for an infectious disease in the future as well, in magnitudes similar to the increased risk for stress. But they don’t actually show that the relationship between tonsillectomy and stress is mediated by that increased risk for infectious disease.

In the end, I find these data really intriguing. Before I dug into the literature, it seemed highly unlikely that removal of these small lumps of tissue would have much of an effect on anything. Now I’m not so sure. A few things can be removed from the human body without any consequences, but it can be hard to know exactly what those consequences are.

That said, given the rather marginal benefits of tonsillectomy and the growing number of studies expanding on the risks, I expect that we’ll see the rates of the surgery decline even further in the future.

F. Perry Wilson, MD, MSCE, is an associate professor of medicine and public health and director of Yale’s Clinical and Translational Research Accelerator in New Haven, Connecticut. He reported no relevant conflicts of interest.

A version of this article first appeared on Medscape.com.

This transcript has been edited for clarity.

You know those times in your life when you’re just feeling ... stressed? You’re on the edge; you have no chill; everything just sort of gets to you. If you can step away from the anxiety for a moment, you might ask yourself where it’s all coming from. Is it really the stuff in your inbox at work or is it money issues at home? Is it something with your relationship, or maybe it’s your sleep quality or your diet? One thing you probably won’t blame for those acute stress reactions is the tonsillectomy you had as a kid. But according to new research, maybe you should.

Tonsillectomy and adenoidectomy are among the most common surgical procedures young people in the United States undergo, with about 300,000 cases a year, according to recent numbers. That’s down a bit from numbers a decade or so ago, but suffice it to say, a good chunk of the population is walking around right now without their tonsils.

The data supporting tonsillectomy have never been great. The two big indications for the surgery are recurrent sore throat — data show that tonsillectomy reduces this by about 0.7 sore throats per year— and obstructive sleep apnea (OSA). The data for improvement of OSA are a bit better than the data for sore throats.

Also, tonsillectomy is a relatively quick, relatively well-reimbursed surgery with indications that are — let’s be honest — somewhat subjective, and so variation is high. One study found that in a single Vermont town, nearly 60% of the population had had their tonsils removed by the time they turned 18. A few towns over, the rate was 20%.

A few factors have led to the decline of tonsillectomy in recent years. Reimbursement rates have gone down a bit. Additionally, better data collection and statistical analysis have shown that the benefits of the procedure are relatively modest.

And then there is a body of medical literature that at first struck me as surprising and almost bizarre: data linking tonsillectomy to subsequent physical and psychiatric disorders.

I teach a course on interpretation of the medical literature, and one of the first things I teach my students is to check their gut when they see the conclusion of a study.

Basically, even before you read the data, have a sense in your own mind if the hypothesis seems reasonable. If a paper is going to conclude that smoking leads to increased risk for bone cancer, I’d say that seems like a reasonable thing to study. If a paper purports to show a link between eating poultry and bone cancer, I’m going to be reading it with quite a bit more skepticism.

The technical term for that process is assessing “biologic plausibility.” If we’re talking tonsils, we have to ask ourselves: Is it plausible that removing someone’s tonsils when they are young should lead to major problems in the future?

At first blush, it didn’t seem very plausible to me.

But the truth is, there are quite a few studies out there demonstrating links like this: links between tonsillectomy and irritable bowel syndrome; links between tonsillectomy and cancer; links between tonsillectomy and depression.

And this week, appearing in JAMA Network Open, is a study linking tonsillectomy with stress disorders.

Researchers leveraged Sweden’s health database, which contains longitudinal data on basically every person who has lived in Sweden since 1981. This database let them know who had a tonsillectomy or adenoidectomy, and when, and what happened to them later in life.

I think the best way to present these data is to show you what they found, and then challenge that finding, and then show you what they did in anticipation of the challenges we would have to their findings. It’s a pretty thorough study.

So, topline results here. The researchers first identified 83,957 individuals who had their tonsils removed. They matched each of them with 10 controls who did not have their tonsils removed but were the same age and sex.

Over around 30 years of follow-up, those people who had their tonsils removed were 43% more likely to develop a stress-related disorder. Among the specific disorders, the risk for PTSD was substantially higher: 55% higher in the tonsillectomy group.

That’s pretty surprising, but I bet you already want to push back against this. Sure, the control group was the same age and sex, but other factors might be different between the two groups. You’d be right to think so. People who got their tonsils out were more likely to have parents with a history of stress-related disorders and who had lower educational attainment. But the primary results were adjusted for those factors.

There’s more to a family than parental educational attainment, of course. To account for household factors that might be harder to measure, the researchers created a second control group, this one comprising the siblings of people who had their tonsils removed but who hadn’t themselves had their tonsils removed.

The relationship between tonsillectomy and stress disorders in this population was not quite as robust but still present: a 34% increase in any stress disorder and a 41% increase in the risk for PTSD.

Maybe kids who get their tonsils out are just followed more closely thereafter, so doctors might notice a stress disorder and document it in the medical record; whereas with other kids it might go unnoticed. This is known as ascertainment bias. The researchers addressed this in a sensitivity analysis where they excluded new diagnoses of stress disorders that occurred in the first 3 years after tonsillectomy. The results were largely unchanged.

So how do we explain these data? We observe a correlation between tonsillectomy in youth and stress disorders in later life. But correlation is not causation. One possibility, perhaps even the most likely possibility, is that tonsillectomy is a marker of some other problem. Maybe these kids are more prone to infections and are therefore more likely to need their tonsils removed. Then, after a lifetime of more infections than average, their stress responses are higher. Or maybe kids with a higher BMI are more likely to have their tonsils removed due to sleep apnea concerns, and it’s that elevated BMI that leads to higher stress in later life.

Or maybe this is causal. Maybe there actually is biological plausibility here. The authors suggest that removal of tonsils might lead to broader changes in the immune system; after all, tonsillar tissue is on the front line of our defense against pathogens that might enter our bodies through our mouths or noses. Immunologic changes lead to greater inflammation over time, and there is decent evidence to link chronic inflammation to a variety of physical and psychological disorders.

In support of this, the authors show that the kids with tonsillectomy were more likely to be hospitalized for an infectious disease in the future as well, in magnitudes similar to the increased risk for stress. But they don’t actually show that the relationship between tonsillectomy and stress is mediated by that increased risk for infectious disease.

In the end, I find these data really intriguing. Before I dug into the literature, it seemed highly unlikely that removal of these small lumps of tissue would have much of an effect on anything. Now I’m not so sure. A few things can be removed from the human body without any consequences, but it can be hard to know exactly what those consequences are.

That said, given the rather marginal benefits of tonsillectomy and the growing number of studies expanding on the risks, I expect that we’ll see the rates of the surgery decline even further in the future.

F. Perry Wilson, MD, MSCE, is an associate professor of medicine and public health and director of Yale’s Clinical and Translational Research Accelerator in New Haven, Connecticut. He reported no relevant conflicts of interest.

A version of this article first appeared on Medscape.com.

How Metals Affect the Brain

This transcript has been edited for clarity.

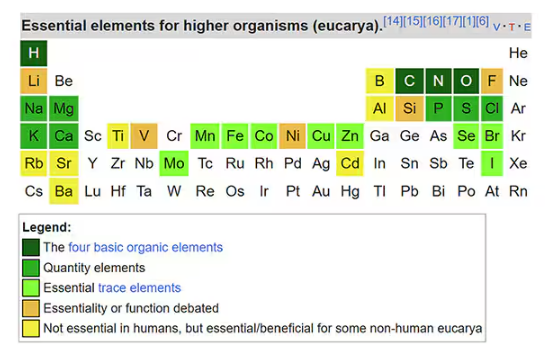

It has always amazed me that our bodies require these tiny amounts of incredibly rare substances to function. Sure, we need oxygen. We need water. But we also need molybdenum, which makes up just 1.2 parts per million of the Earth’s crust.

Without adequate molybdenum intake, we develop seizures, developmental delays, death. Fortunately, we need so little molybdenum that true molybdenum deficiency is incredibly rare — seen only in people on total parenteral nutrition without supplementation or those with certain rare genetic conditions. But still, molybdenum is necessary for life.

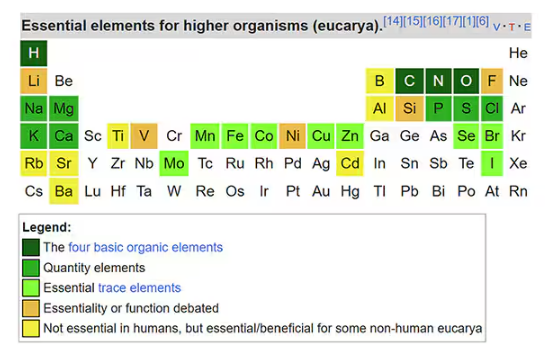

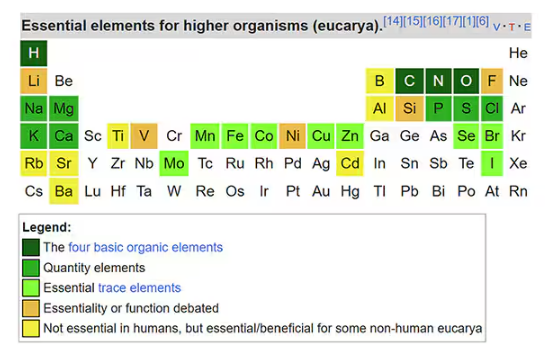

Many metals are. Figure 1 colors the essential minerals on the periodic table. You can see that to stay alive, we humans need not only things like sodium, but selenium, bromine, zinc, copper, and cobalt.

Some metals are very clearly not essential; we can all do without lead and mercury, and probably should.

But just because something is essential for life does not mean that more is better. The dose is the poison, as they say. And this week, we explore whether metals — even essential metals — might be adversely affecting our brains.

It’s not a stretch to think that metal intake could have weird effects on our nervous system. Lead exposure, primarily due to leaded gasoline, has been blamed for an average reduction of about 3 points in our national IQ, for example . But not all metals are created equal. Researchers set out to find out which might be more strongly associated with performance on cognitive tests and dementia, and reported their results in this study in JAMA Network Open.

To do this, they leveraged the MESA cohort study. This is a longitudinal study of a relatively diverse group of 6300 adults who were enrolled from 2000 to 2002 around the United States. At enrollment, they gave a urine sample and took a variety of cognitive tests. Important for this study was the digit symbol substitution test, where participants are provided a code and need to replace a list of numbers with symbols as per that code. Performance on this test worsens with age, depression, and cognitive impairment.

Participants were followed for more than a decade, and over that time, 559 (about 9%) were diagnosed with dementia.

Those baseline urine samples were assayed for a variety of metals — some essential, some very much not, as you can see in Figure 2.

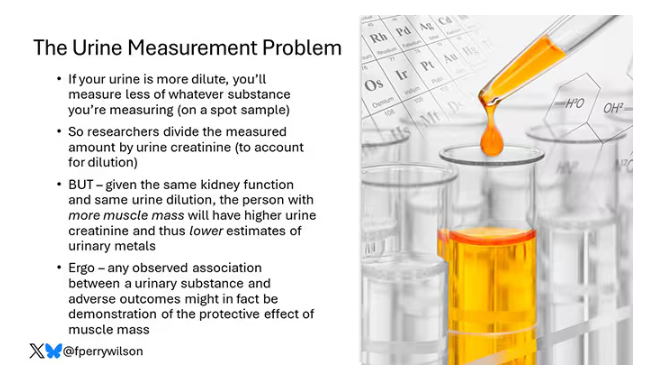

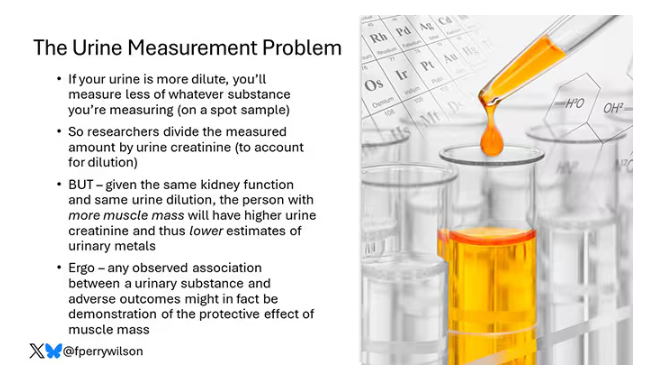

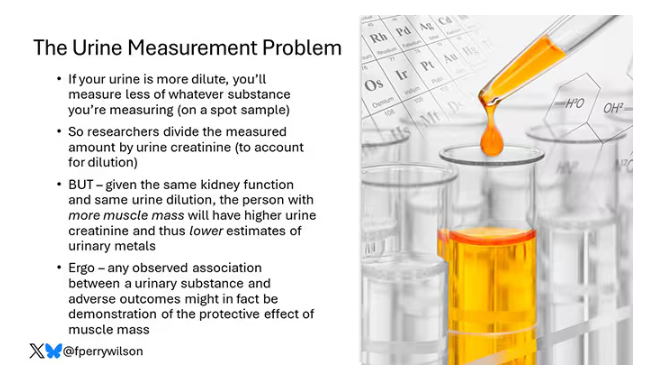

Now, I have to put my kidney doctor hat on for a second and talk about urine measurement ... of anything. The problem with urine is that the concentration can change a lot — by more than 10-fold, in fact — based on how much water you drank recently. Researchers correct for this, and in the case of this study, they do what a lot of researchers do: divide the measured concentration by the urine creatinine level.

This introduces a bit of a problem. Take two people with exactly the same kidney function, who drank exactly the same water, whose urine is exactly the same concentration. The person with more muscle mass will have more creatinine in that urine sample, since creatinine is a byproduct of muscle metabolism. Because people with more muscle mass are generally healthier, when you divide your metal concentration by urine creatinine, you get a lower number, which might lead you to believe that lower levels of the metal in the urine are protective. But in fact, what you’re seeing is that higher levels of creatinine are protective. I see this issue all the time and it will always color results of studies like this.

Okay, I am doffing my kidney doctor hat now to show you the results.

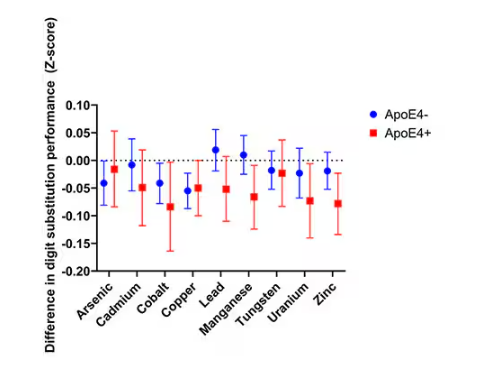

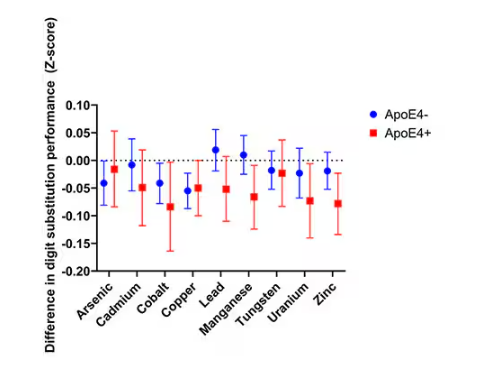

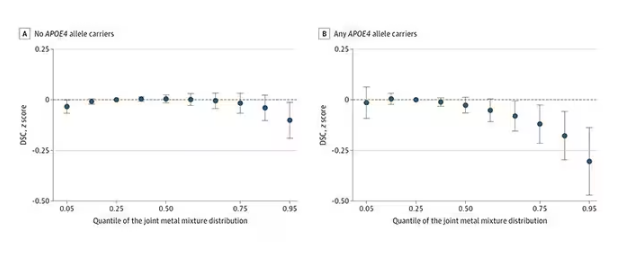

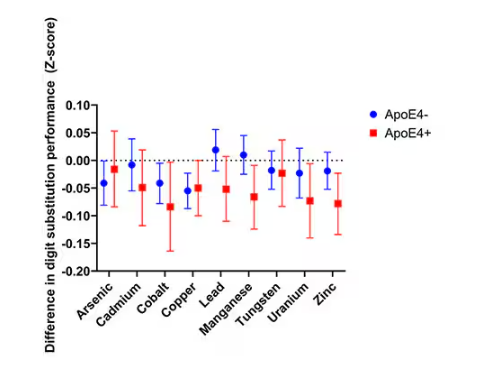

The researchers first looked at the relationship between metal concentrations in the urine and performance on cognitive tests. The results were fairly equivocal, save for that digit substitution test which is shown in Figure 4.

Even these results don’t ring major alarm bells for me. What you’re seeing here is the change in scores on the digit substitution test for each 25-percentile increase in urinary metal level — a pretty big change. And yet, you see really minor changes in the performance on the test. The digit substitution test is not an IQ test; but to give you a feeling for the magnitude of this change, if we looked at copper level, moving from the 25th to the 50th percentile would be associated with a loss of nine tenths of an IQ point.

You see two colors on the Figure 4 graph, by the way. That’s because the researchers stratified their findings based on whether the individual carried the ApoE4 gene allele, which is a risk factor for the development of dementia. There are reasons to believe that neurotoxic metals might be worse in this population, and I suppose you do see generally more adverse effects on scores in the red lines compared with the blue lines. But still, we’re not talking about a huge effect size here.

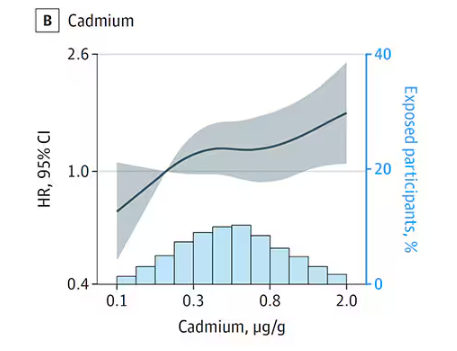

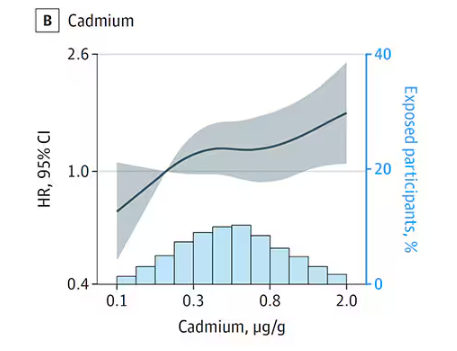

Let’s look at the relationship between these metals and the development of dementia itself, a clearly more important outcome than how well you can replace numeric digits with symbols. I’ll highlight a few of the results that are particularly telling.

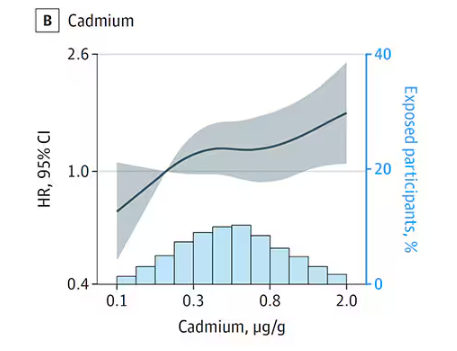

First, the nonessential mineral cadmium, which displays the type of relationship we would expect if the metal were neurotoxic: a clear, roughly linear increase in risk for dementia as urinary concentration increases.

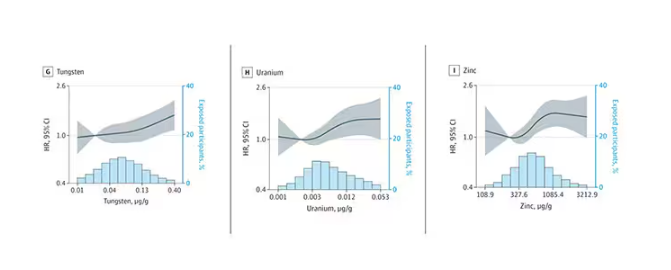

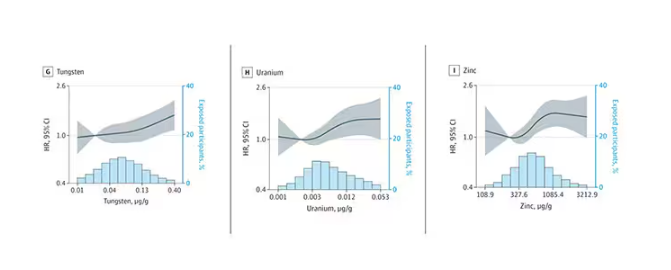

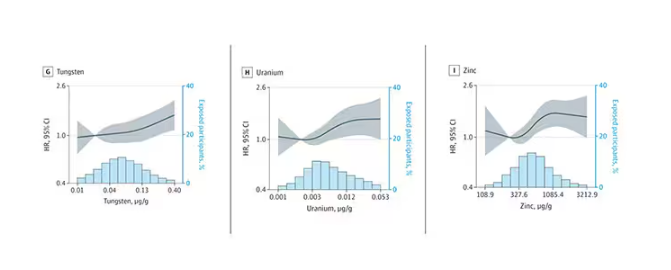

We see roughly similar patterns with the nonessential minerals tungsten and uranium, and the essential mineral zinc (beloved of respiratory-virus avoiders everywhere).

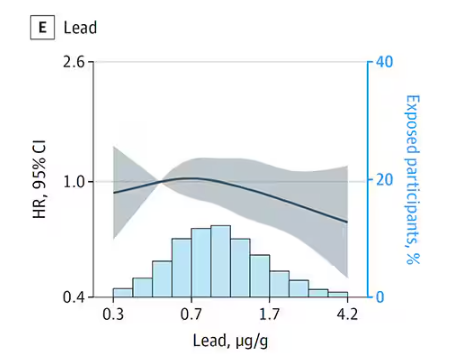

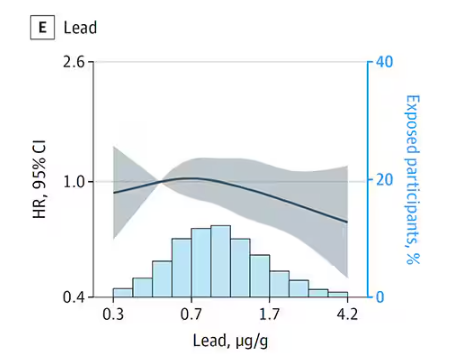

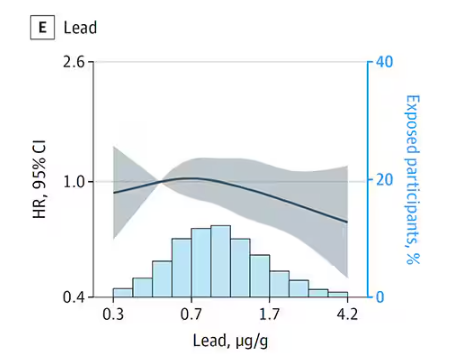

But it is very much not what we see for all metals. Strangest of all, look at lead, which shows basically no relationship with dementia.

This concerns me a bit. Earlier, I discussed the issue of measuring stuff in urine and how standardizing levels to the urine creatinine level introduces a bias due to muscle mass. One way around this is to standardize urine levels to some other marker of urine dilution, like osmolality. But more fundamental than that, I like to see positive and negative controls in studies like this. For example, lead strikes me as a good positive control here. If the experimental framework were valid, I would think we’d see a relationship between lead level and dementia.

For a negative control? Well, something we are quite sure is not neurotoxic — something like sulfur, which is relatively ubiquitous, used in a variety of biological processes, and efficiently eliminated. We don’t have that in this study.

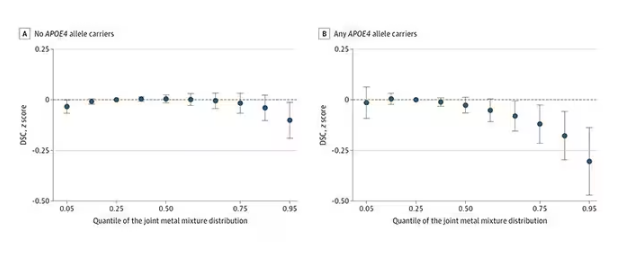

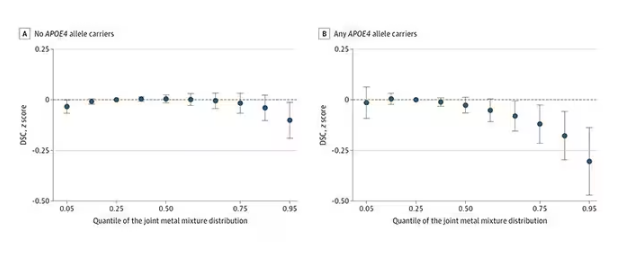

The authors close their case by creating a model that combines all the metal levels, asking the question of whether higher levels of metals in the urine in general worsen cognitive scores. And they find that the relationship exists, as you can see in Figure 8, both in carriers and noncarriers of ApoE4. But, to me, this is even more argument for the creatinine problem. If it’s not a specific metal but just the sort of general concentration of all metals, the risk for confounding by muscle mass is even higher.

So should we worry about ingesting metals? I suppose the answer is ... kind of.

I am sure we should be avoiding lead, despite the results of this study. It’s probably best to stay away from uranium too.

As for the essential metals, I’m sure there is some toxic dose; there’s a toxic dose for everything at some point. But I don’t see evidence in this study to make me worry that a significant chunk of the population is anywhere close to that.

Dr. Wilson is associate professor of medicine and public health and director of the Clinical and Translational Research Accelerator at Yale University, New Haven, Connecticut. He has disclosed no relevant financial relationships.

A version of this article appeared on Medscape.com.

This transcript has been edited for clarity.

It has always amazed me that our bodies require these tiny amounts of incredibly rare substances to function. Sure, we need oxygen. We need water. But we also need molybdenum, which makes up just 1.2 parts per million of the Earth’s crust.

Without adequate molybdenum intake, we develop seizures, developmental delays, death. Fortunately, we need so little molybdenum that true molybdenum deficiency is incredibly rare — seen only in people on total parenteral nutrition without supplementation or those with certain rare genetic conditions. But still, molybdenum is necessary for life.

Many metals are. Figure 1 colors the essential minerals on the periodic table. You can see that to stay alive, we humans need not only things like sodium, but selenium, bromine, zinc, copper, and cobalt.

Some metals are very clearly not essential; we can all do without lead and mercury, and probably should.

But just because something is essential for life does not mean that more is better. The dose is the poison, as they say. And this week, we explore whether metals — even essential metals — might be adversely affecting our brains.

It’s not a stretch to think that metal intake could have weird effects on our nervous system. Lead exposure, primarily due to leaded gasoline, has been blamed for an average reduction of about 3 points in our national IQ, for example . But not all metals are created equal. Researchers set out to find out which might be more strongly associated with performance on cognitive tests and dementia, and reported their results in this study in JAMA Network Open.

To do this, they leveraged the MESA cohort study. This is a longitudinal study of a relatively diverse group of 6300 adults who were enrolled from 2000 to 2002 around the United States. At enrollment, they gave a urine sample and took a variety of cognitive tests. Important for this study was the digit symbol substitution test, where participants are provided a code and need to replace a list of numbers with symbols as per that code. Performance on this test worsens with age, depression, and cognitive impairment.

Participants were followed for more than a decade, and over that time, 559 (about 9%) were diagnosed with dementia.

Those baseline urine samples were assayed for a variety of metals — some essential, some very much not, as you can see in Figure 2.

Now, I have to put my kidney doctor hat on for a second and talk about urine measurement ... of anything. The problem with urine is that the concentration can change a lot — by more than 10-fold, in fact — based on how much water you drank recently. Researchers correct for this, and in the case of this study, they do what a lot of researchers do: divide the measured concentration by the urine creatinine level.

This introduces a bit of a problem. Take two people with exactly the same kidney function, who drank exactly the same water, whose urine is exactly the same concentration. The person with more muscle mass will have more creatinine in that urine sample, since creatinine is a byproduct of muscle metabolism. Because people with more muscle mass are generally healthier, when you divide your metal concentration by urine creatinine, you get a lower number, which might lead you to believe that lower levels of the metal in the urine are protective. But in fact, what you’re seeing is that higher levels of creatinine are protective. I see this issue all the time and it will always color results of studies like this.

Okay, I am doffing my kidney doctor hat now to show you the results.

The researchers first looked at the relationship between metal concentrations in the urine and performance on cognitive tests. The results were fairly equivocal, save for that digit substitution test which is shown in Figure 4.

Even these results don’t ring major alarm bells for me. What you’re seeing here is the change in scores on the digit substitution test for each 25-percentile increase in urinary metal level — a pretty big change. And yet, you see really minor changes in the performance on the test. The digit substitution test is not an IQ test; but to give you a feeling for the magnitude of this change, if we looked at copper level, moving from the 25th to the 50th percentile would be associated with a loss of nine tenths of an IQ point.

You see two colors on the Figure 4 graph, by the way. That’s because the researchers stratified their findings based on whether the individual carried the ApoE4 gene allele, which is a risk factor for the development of dementia. There are reasons to believe that neurotoxic metals might be worse in this population, and I suppose you do see generally more adverse effects on scores in the red lines compared with the blue lines. But still, we’re not talking about a huge effect size here.

Let’s look at the relationship between these metals and the development of dementia itself, a clearly more important outcome than how well you can replace numeric digits with symbols. I’ll highlight a few of the results that are particularly telling.

First, the nonessential mineral cadmium, which displays the type of relationship we would expect if the metal were neurotoxic: a clear, roughly linear increase in risk for dementia as urinary concentration increases.

We see roughly similar patterns with the nonessential minerals tungsten and uranium, and the essential mineral zinc (beloved of respiratory-virus avoiders everywhere).

But it is very much not what we see for all metals. Strangest of all, look at lead, which shows basically no relationship with dementia.

This concerns me a bit. Earlier, I discussed the issue of measuring stuff in urine and how standardizing levels to the urine creatinine level introduces a bias due to muscle mass. One way around this is to standardize urine levels to some other marker of urine dilution, like osmolality. But more fundamental than that, I like to see positive and negative controls in studies like this. For example, lead strikes me as a good positive control here. If the experimental framework were valid, I would think we’d see a relationship between lead level and dementia.

For a negative control? Well, something we are quite sure is not neurotoxic — something like sulfur, which is relatively ubiquitous, used in a variety of biological processes, and efficiently eliminated. We don’t have that in this study.

The authors close their case by creating a model that combines all the metal levels, asking the question of whether higher levels of metals in the urine in general worsen cognitive scores. And they find that the relationship exists, as you can see in Figure 8, both in carriers and noncarriers of ApoE4. But, to me, this is even more argument for the creatinine problem. If it’s not a specific metal but just the sort of general concentration of all metals, the risk for confounding by muscle mass is even higher.

So should we worry about ingesting metals? I suppose the answer is ... kind of.

I am sure we should be avoiding lead, despite the results of this study. It’s probably best to stay away from uranium too.

As for the essential metals, I’m sure there is some toxic dose; there’s a toxic dose for everything at some point. But I don’t see evidence in this study to make me worry that a significant chunk of the population is anywhere close to that.

Dr. Wilson is associate professor of medicine and public health and director of the Clinical and Translational Research Accelerator at Yale University, New Haven, Connecticut. He has disclosed no relevant financial relationships.

A version of this article appeared on Medscape.com.

This transcript has been edited for clarity.

It has always amazed me that our bodies require these tiny amounts of incredibly rare substances to function. Sure, we need oxygen. We need water. But we also need molybdenum, which makes up just 1.2 parts per million of the Earth’s crust.

Without adequate molybdenum intake, we develop seizures, developmental delays, death. Fortunately, we need so little molybdenum that true molybdenum deficiency is incredibly rare — seen only in people on total parenteral nutrition without supplementation or those with certain rare genetic conditions. But still, molybdenum is necessary for life.

Many metals are. Figure 1 colors the essential minerals on the periodic table. You can see that to stay alive, we humans need not only things like sodium, but selenium, bromine, zinc, copper, and cobalt.

Some metals are very clearly not essential; we can all do without lead and mercury, and probably should.

But just because something is essential for life does not mean that more is better. The dose is the poison, as they say. And this week, we explore whether metals — even essential metals — might be adversely affecting our brains.

It’s not a stretch to think that metal intake could have weird effects on our nervous system. Lead exposure, primarily due to leaded gasoline, has been blamed for an average reduction of about 3 points in our national IQ, for example . But not all metals are created equal. Researchers set out to find out which might be more strongly associated with performance on cognitive tests and dementia, and reported their results in this study in JAMA Network Open.

To do this, they leveraged the MESA cohort study. This is a longitudinal study of a relatively diverse group of 6300 adults who were enrolled from 2000 to 2002 around the United States. At enrollment, they gave a urine sample and took a variety of cognitive tests. Important for this study was the digit symbol substitution test, where participants are provided a code and need to replace a list of numbers with symbols as per that code. Performance on this test worsens with age, depression, and cognitive impairment.

Participants were followed for more than a decade, and over that time, 559 (about 9%) were diagnosed with dementia.

Those baseline urine samples were assayed for a variety of metals — some essential, some very much not, as you can see in Figure 2.

Now, I have to put my kidney doctor hat on for a second and talk about urine measurement ... of anything. The problem with urine is that the concentration can change a lot — by more than 10-fold, in fact — based on how much water you drank recently. Researchers correct for this, and in the case of this study, they do what a lot of researchers do: divide the measured concentration by the urine creatinine level.

This introduces a bit of a problem. Take two people with exactly the same kidney function, who drank exactly the same water, whose urine is exactly the same concentration. The person with more muscle mass will have more creatinine in that urine sample, since creatinine is a byproduct of muscle metabolism. Because people with more muscle mass are generally healthier, when you divide your metal concentration by urine creatinine, you get a lower number, which might lead you to believe that lower levels of the metal in the urine are protective. But in fact, what you’re seeing is that higher levels of creatinine are protective. I see this issue all the time and it will always color results of studies like this.

Okay, I am doffing my kidney doctor hat now to show you the results.

The researchers first looked at the relationship between metal concentrations in the urine and performance on cognitive tests. The results were fairly equivocal, save for that digit substitution test which is shown in Figure 4.

Even these results don’t ring major alarm bells for me. What you’re seeing here is the change in scores on the digit substitution test for each 25-percentile increase in urinary metal level — a pretty big change. And yet, you see really minor changes in the performance on the test. The digit substitution test is not an IQ test; but to give you a feeling for the magnitude of this change, if we looked at copper level, moving from the 25th to the 50th percentile would be associated with a loss of nine tenths of an IQ point.

You see two colors on the Figure 4 graph, by the way. That’s because the researchers stratified their findings based on whether the individual carried the ApoE4 gene allele, which is a risk factor for the development of dementia. There are reasons to believe that neurotoxic metals might be worse in this population, and I suppose you do see generally more adverse effects on scores in the red lines compared with the blue lines. But still, we’re not talking about a huge effect size here.

Let’s look at the relationship between these metals and the development of dementia itself, a clearly more important outcome than how well you can replace numeric digits with symbols. I’ll highlight a few of the results that are particularly telling.

First, the nonessential mineral cadmium, which displays the type of relationship we would expect if the metal were neurotoxic: a clear, roughly linear increase in risk for dementia as urinary concentration increases.

We see roughly similar patterns with the nonessential minerals tungsten and uranium, and the essential mineral zinc (beloved of respiratory-virus avoiders everywhere).

But it is very much not what we see for all metals. Strangest of all, look at lead, which shows basically no relationship with dementia.

This concerns me a bit. Earlier, I discussed the issue of measuring stuff in urine and how standardizing levels to the urine creatinine level introduces a bias due to muscle mass. One way around this is to standardize urine levels to some other marker of urine dilution, like osmolality. But more fundamental than that, I like to see positive and negative controls in studies like this. For example, lead strikes me as a good positive control here. If the experimental framework were valid, I would think we’d see a relationship between lead level and dementia.

For a negative control? Well, something we are quite sure is not neurotoxic — something like sulfur, which is relatively ubiquitous, used in a variety of biological processes, and efficiently eliminated. We don’t have that in this study.

The authors close their case by creating a model that combines all the metal levels, asking the question of whether higher levels of metals in the urine in general worsen cognitive scores. And they find that the relationship exists, as you can see in Figure 8, both in carriers and noncarriers of ApoE4. But, to me, this is even more argument for the creatinine problem. If it’s not a specific metal but just the sort of general concentration of all metals, the risk for confounding by muscle mass is even higher.

So should we worry about ingesting metals? I suppose the answer is ... kind of.

I am sure we should be avoiding lead, despite the results of this study. It’s probably best to stay away from uranium too.

As for the essential metals, I’m sure there is some toxic dose; there’s a toxic dose for everything at some point. But I don’t see evidence in this study to make me worry that a significant chunk of the population is anywhere close to that.

Dr. Wilson is associate professor of medicine and public health and director of the Clinical and Translational Research Accelerator at Yale University, New Haven, Connecticut. He has disclosed no relevant financial relationships.

A version of this article appeared on Medscape.com.

How Much Water Should We Drink in a Day?

This transcript has been edited for clarity.

It’s just about the easiest, safest medical advice you can give: “Drink more water.” You have a headache? Drink more water. Tired? Drink more water. Cold coming on? Drink more water. Tom Brady famously attributed his QB longevity to water drinking, among some other less ordinary practices.

I’m a nephrologist — a kidney doctor. I think about water all the time. I can tell you how your brain senses how much water is in your body and exactly how it communicates that information to your kidneys to control how dilute your urine is. I can explain the miraculous ability of the kidney to concentrate urine across a range from 50 mOsm/L to 1200 mOsm/L and the physiology that makes it all work.

But I can’t really tell you how much water you’re supposed to drink. And believe me, I get asked all the time.

I’m sure of a couple of things when it comes to water: You need to drink some. Though some animals, such as kangaroo rats, can get virtually all the water they need from the food they eat, we are not such animals. Without water, we die. I’m also sure that you can die from drinking too much water. Drinking excessive amounts of water dilutes the sodium in your blood, which messes with the electrical system in your brain and heart. I actually had a patient who went on a “water cleanse” and gave herself a seizure.

But, to be fair, assuming your kidneys are working reasonably well and you’re otherwise healthy, you’d need to drink around 20 liters of water a day to get into mortal trouble. The dose is the poison, as they say.

So, somewhere between zero and 20 liters of water is the amount you should be drinking in a day. That much I’m sure of.

But the evidence on where in that range you should target is actually pretty skimpy. You wouldn’t think so if you look at the online wellness influencers, with their Stanleys and their strict water intake regimens. You’d think the evidence for the benefits of drinking extra water is overwhelming.

The venerated National Academy of Medicine suggests that men drink thirteen 8 oz cups a day (that’s about 3 liters) and women drink nine 8 oz cups a day (a bit more than 2 liters). From what I can tell, this recommendation — like the old “8 cups of water per day” recommendation — is pulled out of thin air.

I’m not arguing that we shouldn’t drink water. Of course, water is important. I’m just wondering what data there are to really prove that drinking more water is better.

Fortunately, a team from UCSF has finally done the legwork for us. They break down the actual evidence in this paper, appearing in JAMA Network Open.

The team scoured the medical literature for randomized controlled trials of water intake. This is critical; we don’t want anecdotes about how clear someone’s skin became after they increased their water intake. We want icy cold, clear data. Randomized trials take a group of people and, at random, assign some to the intervention — in this case, drinking more water — and others to keep doing what they would normally do.

The team reviewed nearly 1500 papers but only 18 (!) met the rigorous criteria to be included in the analysis, as you can see from this flow chart.

This is the first important finding; not many high-quality studies have investigated how much water we should drink. Of course, water isn’t a prescription product, so funding is likely hard to come by. Can we do a trial of Dasani?

In any case, these 18 trials all looked at different outcomes of interest. Four studies looked at the impact of drinking more water on weight loss, two on fasting blood glucose, two on headache, two on urinary tract infection, two on kidney stones, and six studies on various other outcomes. None of the studies looked at energy, skin tone, or overall wellness, though one did measure a quality-of-life score.

And if I could sum up all these studies in a word, that word would be “meh.”

One of four weight loss studies showed that increasing water intake had no effect on weight loss. Two studies showed an effect, but drinking extra water was combined with a low-calorie diet, so that feels a bit like cheating to me. One study randomized participants to drink half a liter of water before meals, and that group did lose more weight than the control group — about a kilogram more over 12 weeks. That’s not exactly Ozempic.

For fasting blood glucose, although one trial suggested that higher premeal water intake lowered glucose levels, the other study (which looked just at increasing water overall) didn’t.

For headache — and, cards on the table here, I’m a big believer in water for headaches — one study showed nothing. The other showed that increasing water intake by 1.5 liters per day improved migraine-related quality of life but didn’t change the number of headache days per month.

For urinary tract infections, one positive trial and one negative one.

The best evidence comes from the kidney stone trials. Increasing water intake to achieve more than two liters of urine a day was associated with a significant reduction in kidney stone recurrence. I consider this a positive finding, more or less. You would be hard-pressed to find a kidney doctor who doesn’t think that people with a history of kidney stones should drink more water.

What about that quality-of-life study? They randomized participants to either drink 1.5 liters of extra water per day (intervention group) or not (control group). Six months later, the scores on the quality-of-life survey were no different between those two groups.

Thirsty yet?

So, what’s going on here? There are a few possibilities.

First, I need to point out that clinical trials are really hard. All the studies in this review were relatively small, with most enrolling fewer than 100 people. The effect of extra water would need to be pretty potent to detect it with those small samples.

I can’t help but point out that our bodies are actually exquisitely tuned to manage how much water we carry. As we lose water throughout the day from sweat and exhalation, our blood becomes a tiny bit more concentrated — the sodium level goes up. Our brains detect that and create a sensation we call thirst. Thirst is one of the most powerful drives we have. Animals, including humans, when thirsty, will choose water over food, over drugs, and over sex. It is incredibly hard to resist, and assuming that we have ready access to water, there is no need to resist it. We drink when we are thirsty. And that may be enough.

Of course, pushing beyond thirst is possible. We are sapient beings who can drink more than we want to. But what we can’t do, assuming our kidneys work, is hold onto that water. It passes right through us. In the case of preventing kidney stones, this is a good thing. Putting more water into your body leads to more water coming out — more dilute urine — which means it’s harder for stones to form.

But for all that other stuff? The wellness, the skin tone, and so on? It just doesn’t make much sense. If you drink an extra liter of water, you pee an extra liter of water. Net net? Zero.

Some folks will argue that the extra pee gets rid of extra toxins or something like that, but — sorry, kidney doctor Perry here again — that’s not how pee works. The clearance of toxins from the blood happens way upstream of where your urine is diluted or concentrated.

If you drink more, the same toxins come out, just with more water around them. In fact, one of the largest studies in this JAMA Network Open review assessed whether increasing water consumption in people with chronic kidney disease would improve kidney function. It didn’t.

I am left, then, with only a bit more confidence than when I began. Beyond that, it seems reasonable to trust the millions of years of evolution that have made water homeostasis central to life itself. Give yourself access to water. Drink when you’re thirsty. Drink a bit more if you’d like. But no need to push it. Your kidneys won’t let you anyway.

F. Perry Wilson, MD, MSCE, is an associate professor of medicine and public health and director of Yale’s Clinical and Translational Research Accelerator in New Haven, Connecticut. He disclosed no relevant conflicts of interest.

A version of this article first appeared on Medscape.com.

This transcript has been edited for clarity.

It’s just about the easiest, safest medical advice you can give: “Drink more water.” You have a headache? Drink more water. Tired? Drink more water. Cold coming on? Drink more water. Tom Brady famously attributed his QB longevity to water drinking, among some other less ordinary practices.

I’m a nephrologist — a kidney doctor. I think about water all the time. I can tell you how your brain senses how much water is in your body and exactly how it communicates that information to your kidneys to control how dilute your urine is. I can explain the miraculous ability of the kidney to concentrate urine across a range from 50 mOsm/L to 1200 mOsm/L and the physiology that makes it all work.

But I can’t really tell you how much water you’re supposed to drink. And believe me, I get asked all the time.

I’m sure of a couple of things when it comes to water: You need to drink some. Though some animals, such as kangaroo rats, can get virtually all the water they need from the food they eat, we are not such animals. Without water, we die. I’m also sure that you can die from drinking too much water. Drinking excessive amounts of water dilutes the sodium in your blood, which messes with the electrical system in your brain and heart. I actually had a patient who went on a “water cleanse” and gave herself a seizure.

But, to be fair, assuming your kidneys are working reasonably well and you’re otherwise healthy, you’d need to drink around 20 liters of water a day to get into mortal trouble. The dose is the poison, as they say.

So, somewhere between zero and 20 liters of water is the amount you should be drinking in a day. That much I’m sure of.

But the evidence on where in that range you should target is actually pretty skimpy. You wouldn’t think so if you look at the online wellness influencers, with their Stanleys and their strict water intake regimens. You’d think the evidence for the benefits of drinking extra water is overwhelming.

The venerated National Academy of Medicine suggests that men drink thirteen 8 oz cups a day (that’s about 3 liters) and women drink nine 8 oz cups a day (a bit more than 2 liters). From what I can tell, this recommendation — like the old “8 cups of water per day” recommendation — is pulled out of thin air.

I’m not arguing that we shouldn’t drink water. Of course, water is important. I’m just wondering what data there are to really prove that drinking more water is better.

Fortunately, a team from UCSF has finally done the legwork for us. They break down the actual evidence in this paper, appearing in JAMA Network Open.

The team scoured the medical literature for randomized controlled trials of water intake. This is critical; we don’t want anecdotes about how clear someone’s skin became after they increased their water intake. We want icy cold, clear data. Randomized trials take a group of people and, at random, assign some to the intervention — in this case, drinking more water — and others to keep doing what they would normally do.

The team reviewed nearly 1500 papers but only 18 (!) met the rigorous criteria to be included in the analysis, as you can see from this flow chart.

This is the first important finding; not many high-quality studies have investigated how much water we should drink. Of course, water isn’t a prescription product, so funding is likely hard to come by. Can we do a trial of Dasani?

In any case, these 18 trials all looked at different outcomes of interest. Four studies looked at the impact of drinking more water on weight loss, two on fasting blood glucose, two on headache, two on urinary tract infection, two on kidney stones, and six studies on various other outcomes. None of the studies looked at energy, skin tone, or overall wellness, though one did measure a quality-of-life score.

And if I could sum up all these studies in a word, that word would be “meh.”

One of four weight loss studies showed that increasing water intake had no effect on weight loss. Two studies showed an effect, but drinking extra water was combined with a low-calorie diet, so that feels a bit like cheating to me. One study randomized participants to drink half a liter of water before meals, and that group did lose more weight than the control group — about a kilogram more over 12 weeks. That’s not exactly Ozempic.

For fasting blood glucose, although one trial suggested that higher premeal water intake lowered glucose levels, the other study (which looked just at increasing water overall) didn’t.

For headache — and, cards on the table here, I’m a big believer in water for headaches — one study showed nothing. The other showed that increasing water intake by 1.5 liters per day improved migraine-related quality of life but didn’t change the number of headache days per month.

For urinary tract infections, one positive trial and one negative one.

The best evidence comes from the kidney stone trials. Increasing water intake to achieve more than two liters of urine a day was associated with a significant reduction in kidney stone recurrence. I consider this a positive finding, more or less. You would be hard-pressed to find a kidney doctor who doesn’t think that people with a history of kidney stones should drink more water.

What about that quality-of-life study? They randomized participants to either drink 1.5 liters of extra water per day (intervention group) or not (control group). Six months later, the scores on the quality-of-life survey were no different between those two groups.

Thirsty yet?

So, what’s going on here? There are a few possibilities.

First, I need to point out that clinical trials are really hard. All the studies in this review were relatively small, with most enrolling fewer than 100 people. The effect of extra water would need to be pretty potent to detect it with those small samples.

I can’t help but point out that our bodies are actually exquisitely tuned to manage how much water we carry. As we lose water throughout the day from sweat and exhalation, our blood becomes a tiny bit more concentrated — the sodium level goes up. Our brains detect that and create a sensation we call thirst. Thirst is one of the most powerful drives we have. Animals, including humans, when thirsty, will choose water over food, over drugs, and over sex. It is incredibly hard to resist, and assuming that we have ready access to water, there is no need to resist it. We drink when we are thirsty. And that may be enough.

Of course, pushing beyond thirst is possible. We are sapient beings who can drink more than we want to. But what we can’t do, assuming our kidneys work, is hold onto that water. It passes right through us. In the case of preventing kidney stones, this is a good thing. Putting more water into your body leads to more water coming out — more dilute urine — which means it’s harder for stones to form.

But for all that other stuff? The wellness, the skin tone, and so on? It just doesn’t make much sense. If you drink an extra liter of water, you pee an extra liter of water. Net net? Zero.

Some folks will argue that the extra pee gets rid of extra toxins or something like that, but — sorry, kidney doctor Perry here again — that’s not how pee works. The clearance of toxins from the blood happens way upstream of where your urine is diluted or concentrated.

If you drink more, the same toxins come out, just with more water around them. In fact, one of the largest studies in this JAMA Network Open review assessed whether increasing water consumption in people with chronic kidney disease would improve kidney function. It didn’t.

I am left, then, with only a bit more confidence than when I began. Beyond that, it seems reasonable to trust the millions of years of evolution that have made water homeostasis central to life itself. Give yourself access to water. Drink when you’re thirsty. Drink a bit more if you’d like. But no need to push it. Your kidneys won’t let you anyway.

F. Perry Wilson, MD, MSCE, is an associate professor of medicine and public health and director of Yale’s Clinical and Translational Research Accelerator in New Haven, Connecticut. He disclosed no relevant conflicts of interest.

A version of this article first appeared on Medscape.com.

This transcript has been edited for clarity.

It’s just about the easiest, safest medical advice you can give: “Drink more water.” You have a headache? Drink more water. Tired? Drink more water. Cold coming on? Drink more water. Tom Brady famously attributed his QB longevity to water drinking, among some other less ordinary practices.

I’m a nephrologist — a kidney doctor. I think about water all the time. I can tell you how your brain senses how much water is in your body and exactly how it communicates that information to your kidneys to control how dilute your urine is. I can explain the miraculous ability of the kidney to concentrate urine across a range from 50 mOsm/L to 1200 mOsm/L and the physiology that makes it all work.

But I can’t really tell you how much water you’re supposed to drink. And believe me, I get asked all the time.

I’m sure of a couple of things when it comes to water: You need to drink some. Though some animals, such as kangaroo rats, can get virtually all the water they need from the food they eat, we are not such animals. Without water, we die. I’m also sure that you can die from drinking too much water. Drinking excessive amounts of water dilutes the sodium in your blood, which messes with the electrical system in your brain and heart. I actually had a patient who went on a “water cleanse” and gave herself a seizure.

But, to be fair, assuming your kidneys are working reasonably well and you’re otherwise healthy, you’d need to drink around 20 liters of water a day to get into mortal trouble. The dose is the poison, as they say.

So, somewhere between zero and 20 liters of water is the amount you should be drinking in a day. That much I’m sure of.

But the evidence on where in that range you should target is actually pretty skimpy. You wouldn’t think so if you look at the online wellness influencers, with their Stanleys and their strict water intake regimens. You’d think the evidence for the benefits of drinking extra water is overwhelming.

The venerated National Academy of Medicine suggests that men drink thirteen 8 oz cups a day (that’s about 3 liters) and women drink nine 8 oz cups a day (a bit more than 2 liters). From what I can tell, this recommendation — like the old “8 cups of water per day” recommendation — is pulled out of thin air.

I’m not arguing that we shouldn’t drink water. Of course, water is important. I’m just wondering what data there are to really prove that drinking more water is better.

Fortunately, a team from UCSF has finally done the legwork for us. They break down the actual evidence in this paper, appearing in JAMA Network Open.

The team scoured the medical literature for randomized controlled trials of water intake. This is critical; we don’t want anecdotes about how clear someone’s skin became after they increased their water intake. We want icy cold, clear data. Randomized trials take a group of people and, at random, assign some to the intervention — in this case, drinking more water — and others to keep doing what they would normally do.

The team reviewed nearly 1500 papers but only 18 (!) met the rigorous criteria to be included in the analysis, as you can see from this flow chart.

This is the first important finding; not many high-quality studies have investigated how much water we should drink. Of course, water isn’t a prescription product, so funding is likely hard to come by. Can we do a trial of Dasani?

In any case, these 18 trials all looked at different outcomes of interest. Four studies looked at the impact of drinking more water on weight loss, two on fasting blood glucose, two on headache, two on urinary tract infection, two on kidney stones, and six studies on various other outcomes. None of the studies looked at energy, skin tone, or overall wellness, though one did measure a quality-of-life score.

And if I could sum up all these studies in a word, that word would be “meh.”

One of four weight loss studies showed that increasing water intake had no effect on weight loss. Two studies showed an effect, but drinking extra water was combined with a low-calorie diet, so that feels a bit like cheating to me. One study randomized participants to drink half a liter of water before meals, and that group did lose more weight than the control group — about a kilogram more over 12 weeks. That’s not exactly Ozempic.

For fasting blood glucose, although one trial suggested that higher premeal water intake lowered glucose levels, the other study (which looked just at increasing water overall) didn’t.

For headache — and, cards on the table here, I’m a big believer in water for headaches — one study showed nothing. The other showed that increasing water intake by 1.5 liters per day improved migraine-related quality of life but didn’t change the number of headache days per month.

For urinary tract infections, one positive trial and one negative one.

The best evidence comes from the kidney stone trials. Increasing water intake to achieve more than two liters of urine a day was associated with a significant reduction in kidney stone recurrence. I consider this a positive finding, more or less. You would be hard-pressed to find a kidney doctor who doesn’t think that people with a history of kidney stones should drink more water.

What about that quality-of-life study? They randomized participants to either drink 1.5 liters of extra water per day (intervention group) or not (control group). Six months later, the scores on the quality-of-life survey were no different between those two groups.

Thirsty yet?

So, what’s going on here? There are a few possibilities.

First, I need to point out that clinical trials are really hard. All the studies in this review were relatively small, with most enrolling fewer than 100 people. The effect of extra water would need to be pretty potent to detect it with those small samples.

I can’t help but point out that our bodies are actually exquisitely tuned to manage how much water we carry. As we lose water throughout the day from sweat and exhalation, our blood becomes a tiny bit more concentrated — the sodium level goes up. Our brains detect that and create a sensation we call thirst. Thirst is one of the most powerful drives we have. Animals, including humans, when thirsty, will choose water over food, over drugs, and over sex. It is incredibly hard to resist, and assuming that we have ready access to water, there is no need to resist it. We drink when we are thirsty. And that may be enough.

Of course, pushing beyond thirst is possible. We are sapient beings who can drink more than we want to. But what we can’t do, assuming our kidneys work, is hold onto that water. It passes right through us. In the case of preventing kidney stones, this is a good thing. Putting more water into your body leads to more water coming out — more dilute urine — which means it’s harder for stones to form.

But for all that other stuff? The wellness, the skin tone, and so on? It just doesn’t make much sense. If you drink an extra liter of water, you pee an extra liter of water. Net net? Zero.

Some folks will argue that the extra pee gets rid of extra toxins or something like that, but — sorry, kidney doctor Perry here again — that’s not how pee works. The clearance of toxins from the blood happens way upstream of where your urine is diluted or concentrated.

If you drink more, the same toxins come out, just with more water around them. In fact, one of the largest studies in this JAMA Network Open review assessed whether increasing water consumption in people with chronic kidney disease would improve kidney function. It didn’t.

I am left, then, with only a bit more confidence than when I began. Beyond that, it seems reasonable to trust the millions of years of evolution that have made water homeostasis central to life itself. Give yourself access to water. Drink when you’re thirsty. Drink a bit more if you’d like. But no need to push it. Your kidneys won’t let you anyway.

F. Perry Wilson, MD, MSCE, is an associate professor of medicine and public health and director of Yale’s Clinical and Translational Research Accelerator in New Haven, Connecticut. He disclosed no relevant conflicts of interest.

A version of this article first appeared on Medscape.com.

We Haven’t Kicked Our Pandemic Drinking Habit

This transcript has been edited for clarity.

You’re stuck in your house. Work is closed or you’re working remotely. Your kids’ school is closed or is offering an hour or two a day of Zoom-based instruction. You have a bit of cabin fever which, you suppose, is better than the actual fever that comes with COVID infections, which are running rampant during the height of the pandemic. But still — it’s stressful. What do you do?

We all coped in our own way. We baked sourdough bread. We built that tree house we’d been meaning to build. We started podcasts. And ... we drank. Quite a bit, actually.

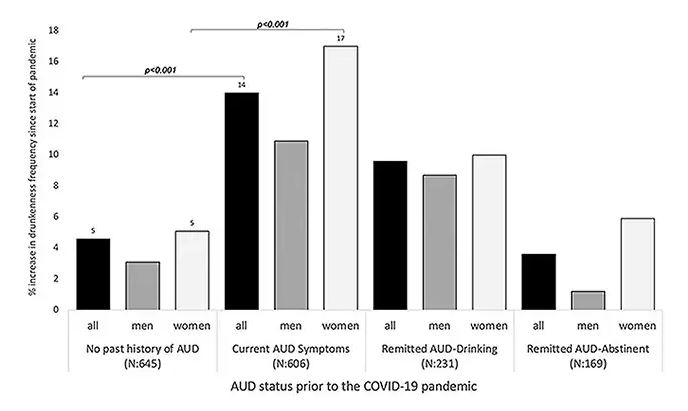

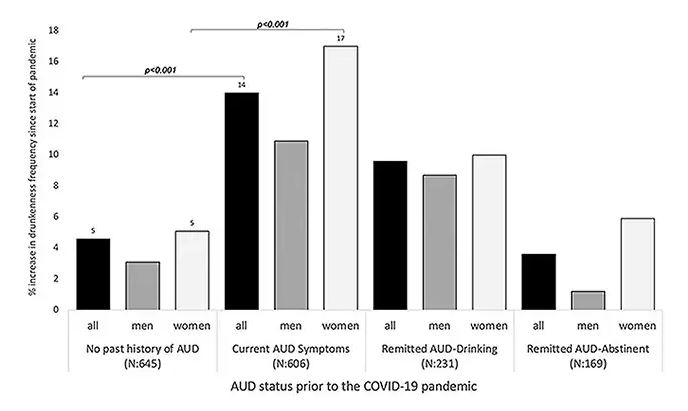

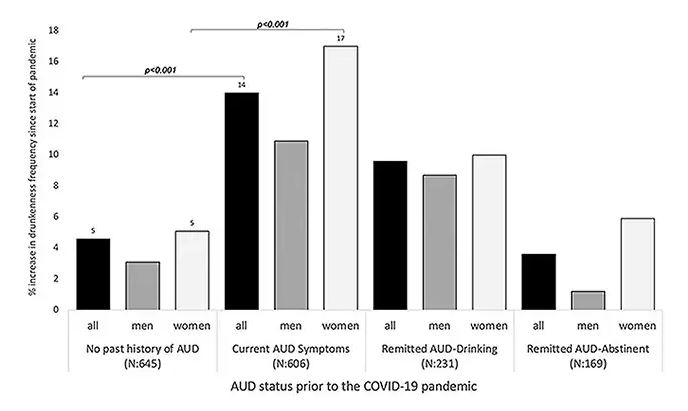

During the first year of the pandemic, alcohol sales increased 3%, the largest year-on-year increase in more than 50 years. There was also an increase in drunkenness across the board, though it was most pronounced in those who were already at risk from alcohol use disorder.

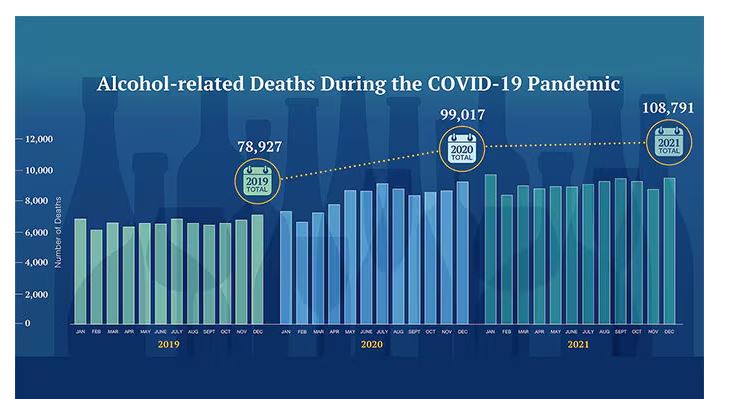

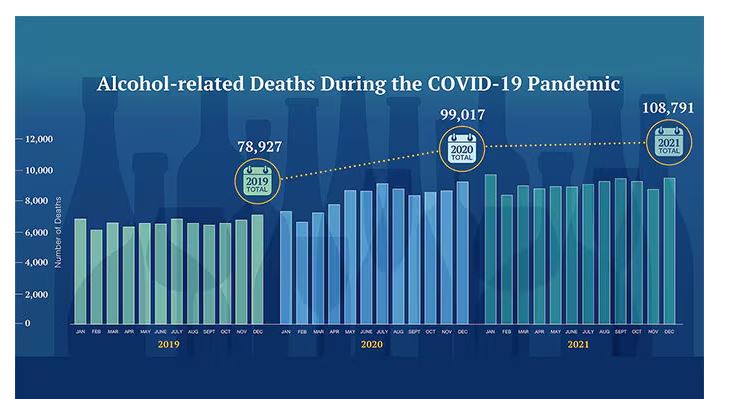

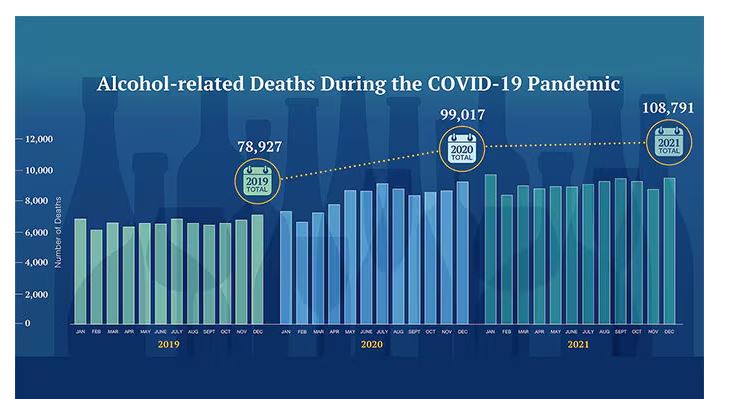

Alcohol-associated deaths increased by around 10% from 2019 to 2020. Obviously, this is a small percentage of COVID-associated deaths, but it is nothing to sneeze at.

But look, we were anxious. And say what you will about alcohol as a risk factor for liver disease, heart disease, and cancer — not to mention traffic accidents — it is an anxiolytic, at least in the short term.

But as the pandemic waned, as society reopened, as we got back to work and reintegrated into our social circles and escaped the confines of our houses and apartments, our drinking habits went back to normal, right?

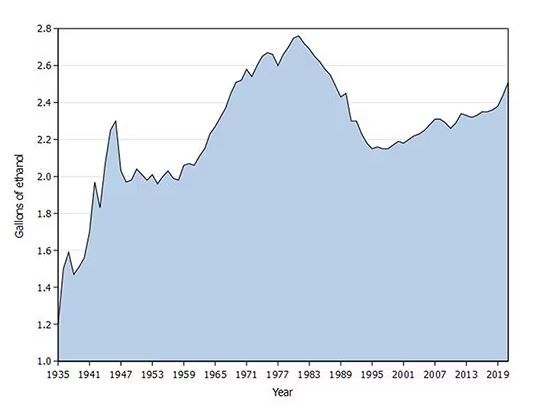

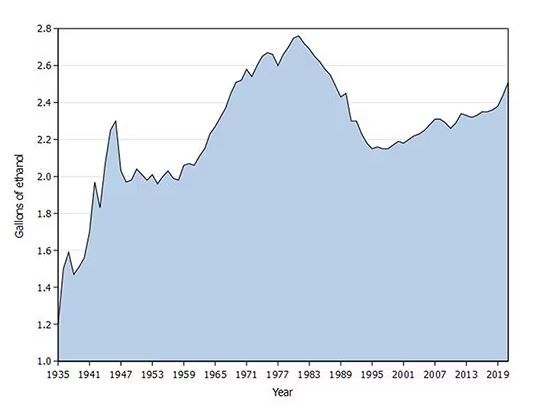

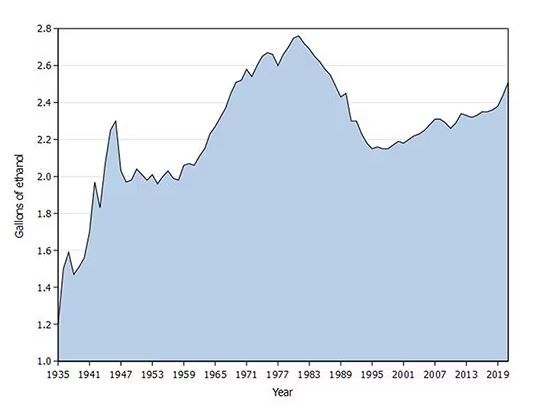

Americans’ love affair with alcohol has been a torrid one, as this graph showing gallons of ethanol consumed per capita over time shows you.

What you see is a steady increase in alcohol consumption from the end of prohibition in 1933 to its peak in the heady days of the early 1980s, followed by a steady decline until the mid-1990s. Since then, there has been another increase with, as you will note, a notable uptick during the early part of the COVID pandemic.

What came across my desk this week was updated data, appearing in a research letter in Annals of Internal Medicine, that compared alcohol consumption in 2020 — the first year of the COVID pandemic — with that in 2022 (the latest available data). And it looks like not much has changed.

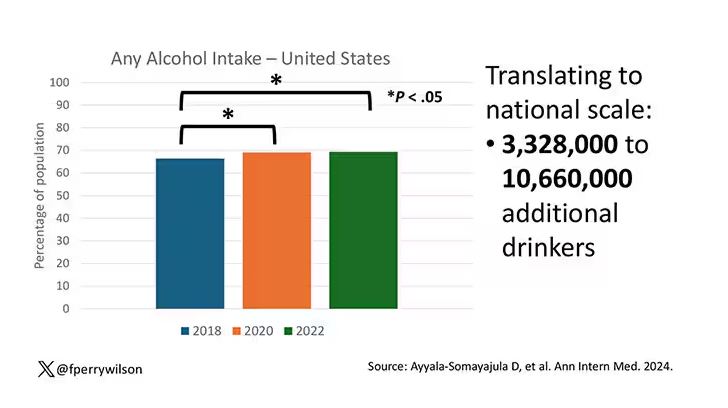

This was a population-based survey study leveraging the National Health Interview Survey, including around 80,000 respondents from 2018, 2020, and 2022.

They created two main categories of drinking: drinking any alcohol at all and heavy drinking.

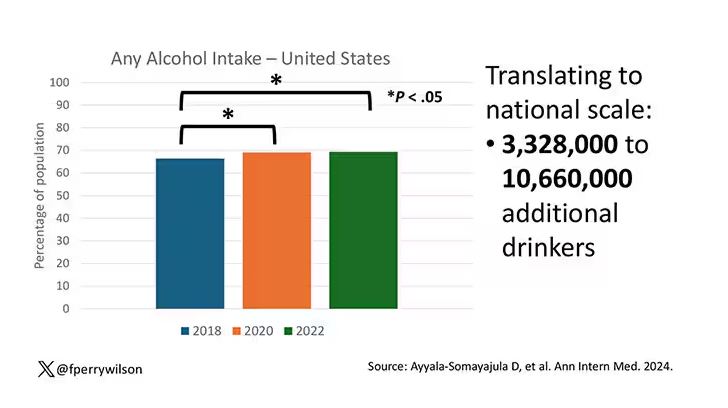

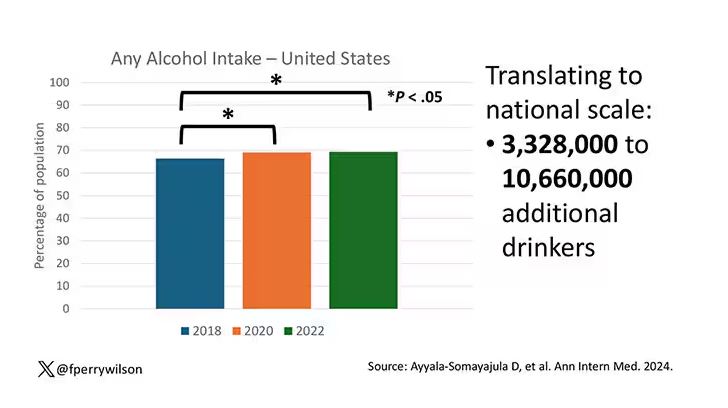

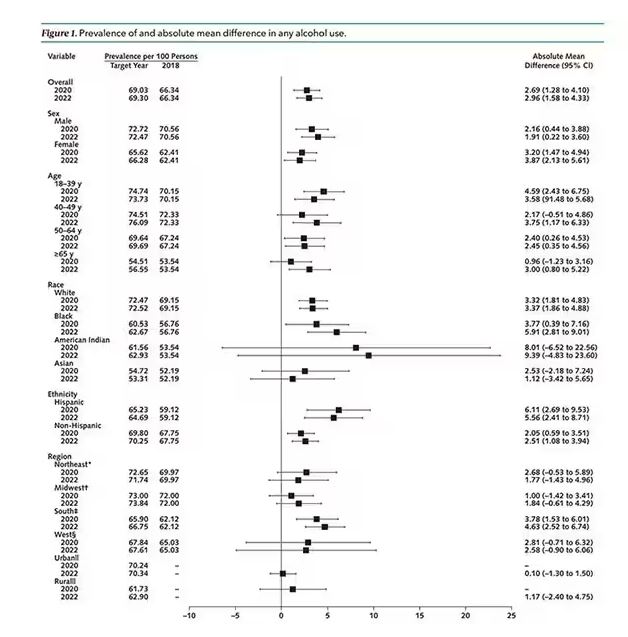

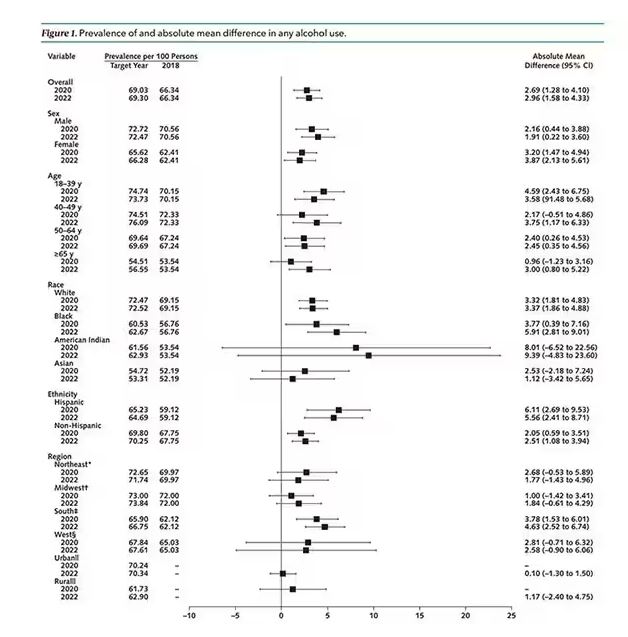

In 2018, 66% of Americans reported drinking any alcohol. That had risen to 69% by 2020, and it stayed at that level even after the lockdown had ended, as you can see here. This may seem like a small increase, but this was a highly significant result. Translating into absolute numbers, it suggests that we have added between 3,328,000 and 10,660,000 net additional drinkers to the population over this time period.

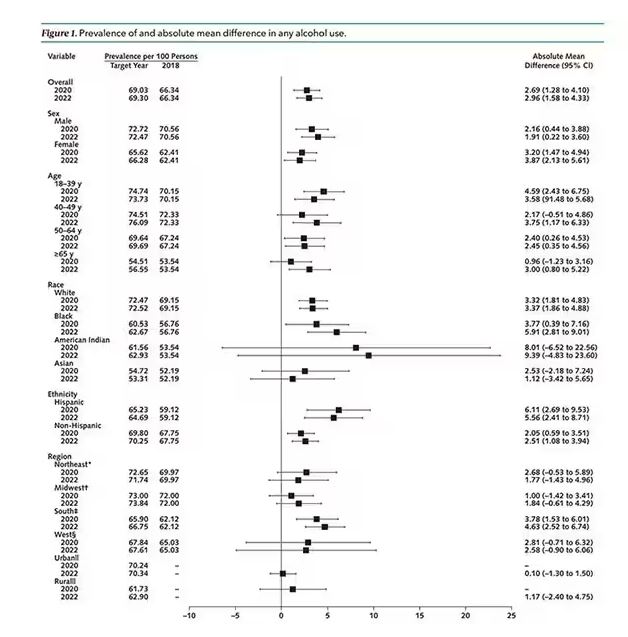

This trend was seen across basically every demographic group, with some notably larger increases among Black and Hispanic individuals, and marginally higher rates among people under age 30.

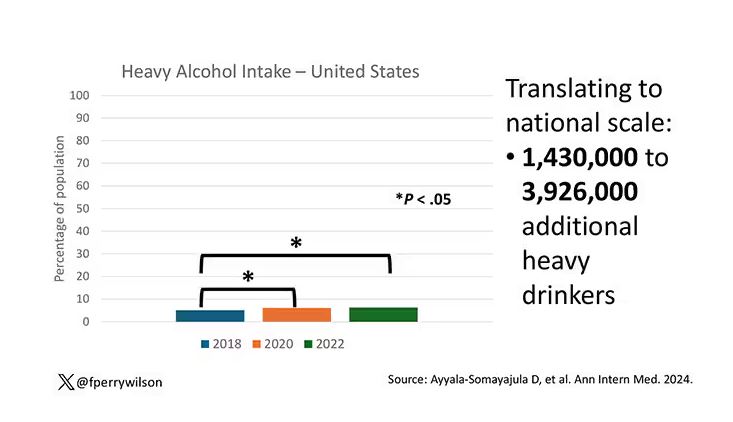

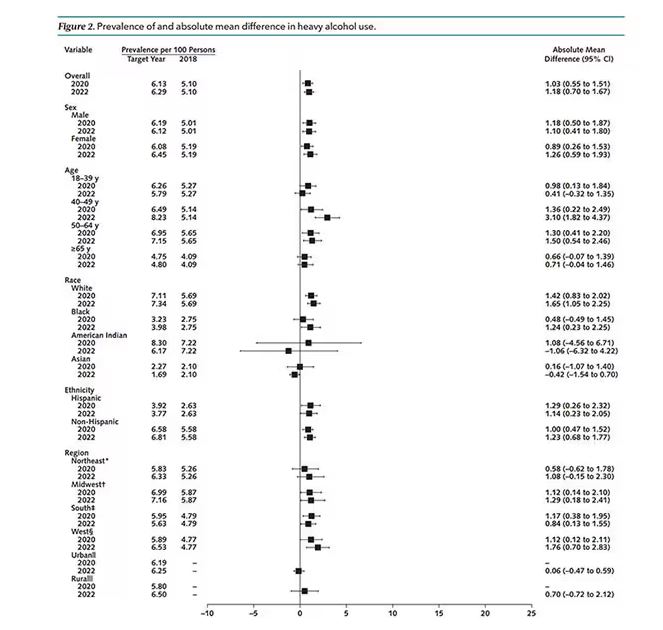

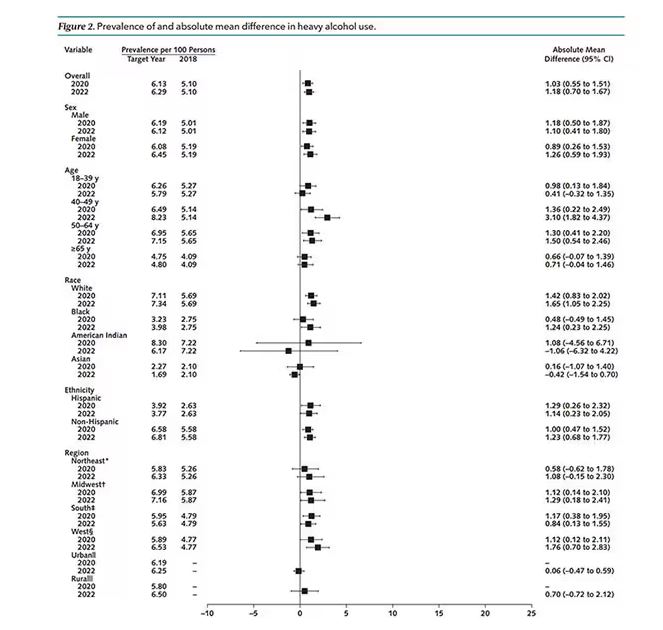

But far be it from me to deny someone a tot of brandy on a cold winter’s night. More interesting is the rate of heavy alcohol use reported in the study. For context, the definitions of heavy alcohol use appear here. For men, it’s any one day with five or more drinks or 15 or more drinks per week. For women it’s four or more drinks on a given day or eight drinks or more per week.

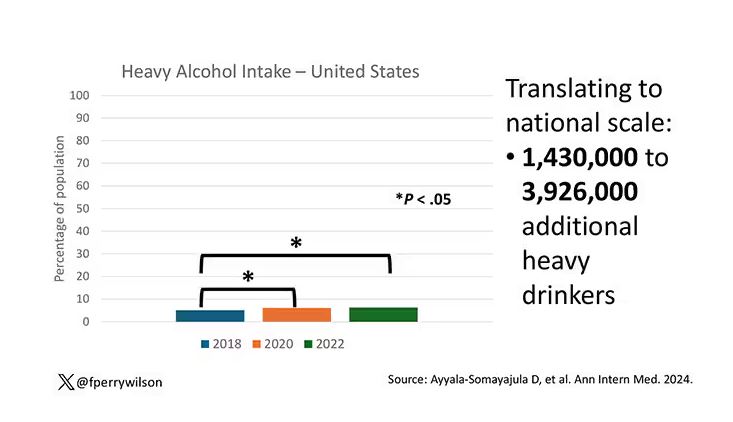

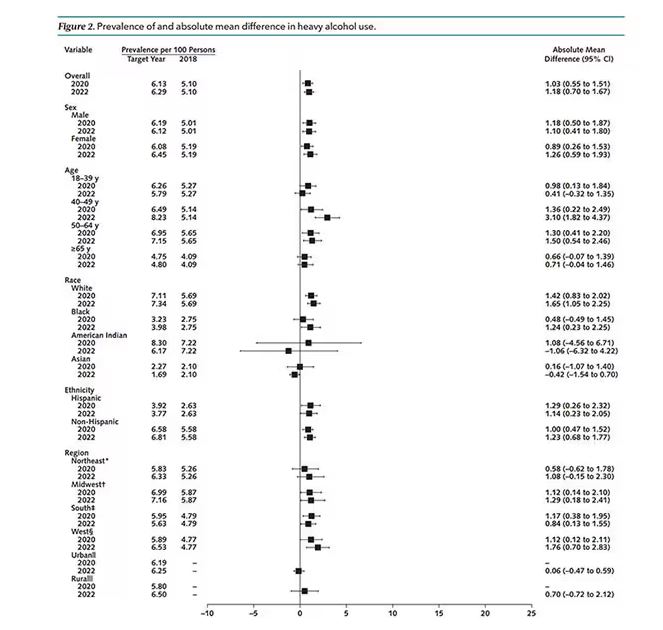

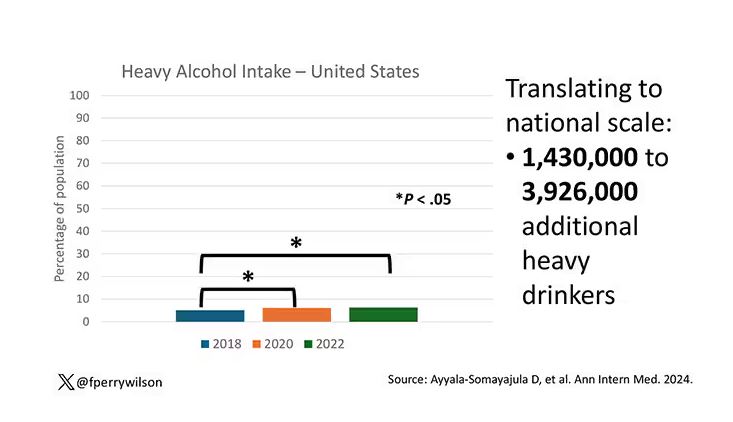

The overall rate of heavy drinking was about 5.1% in 2018 before the start of the pandemic. That rose to more than 6% in 2020 and it rose a bit more into 2022. The net change here, on a population level, is from 1,430,000 to 3,926,000 new heavy drinkers. That’s a number that rises to the level of an actual public health issue.

Again, this trend was fairly broad across demographic groups. Although in this case, the changes were a bit larger among White people and those in the 40- to 49-year age group. This is my cohort, I guess. Cheers.

The information we have from this study is purely descriptive. It tells us that people are drinking more since the pandemic. It doesn’t tell us why, or the impact that this excess drinking will have on subsequent health outcomes, although other studies would suggest that it will contribute to certain chronic conditions, both physical and mental.

Maybe more important is that it reminds us that habits are sticky. Once we become accustomed to something — that glass of wine or two with dinner, and before bed — it has a tendency to stay with us. There’s an upside to that phenomenon as well, of course; it means that we can train good habits too. And those, once they become ingrained, can be just as hard to break. We just need to be mindful of the habits we pick. New Year 2025 is just around the corner. Start brainstorming those resolutions now.

Wilson is associate professor of medicine and public health and director of the Clinical and Translational Research Accelerator at Yale University, New Haven, Conn. He has disclosed no relevant financial relationships.

A version of this article appeared on Medscape.com.

This transcript has been edited for clarity.

You’re stuck in your house. Work is closed or you’re working remotely. Your kids’ school is closed or is offering an hour or two a day of Zoom-based instruction. You have a bit of cabin fever which, you suppose, is better than the actual fever that comes with COVID infections, which are running rampant during the height of the pandemic. But still — it’s stressful. What do you do?

We all coped in our own way. We baked sourdough bread. We built that tree house we’d been meaning to build. We started podcasts. And ... we drank. Quite a bit, actually.

During the first year of the pandemic, alcohol sales increased 3%, the largest year-on-year increase in more than 50 years. There was also an increase in drunkenness across the board, though it was most pronounced in those who were already at risk from alcohol use disorder.

Alcohol-associated deaths increased by around 10% from 2019 to 2020. Obviously, this is a small percentage of COVID-associated deaths, but it is nothing to sneeze at.

But look, we were anxious. And say what you will about alcohol as a risk factor for liver disease, heart disease, and cancer — not to mention traffic accidents — it is an anxiolytic, at least in the short term.

But as the pandemic waned, as society reopened, as we got back to work and reintegrated into our social circles and escaped the confines of our houses and apartments, our drinking habits went back to normal, right?