Helicobacter pylori May Shift Gastric Cancer Earlier

ORLANDO, Fla. — Helicobacter pylori infection may be associated with gastric cancer developing earlier in life, new data suggested.

H pylori infection is a leading risk factor for gastric carcinoma, accounting for as many as 90% of cases. Experts said the new data suggest that failure to screen routinely for the bacterium could be contributing to younger people developing easily preventable forms of gastric cancer.

“The most concerning and the most interesting finding for us was we found higher prevalence” of gastric cancer linked to H pylori in the younger group, Neel Patel, MD, MPH, with the Department of Pathology at Staten Island University Hospital in Staten Island, New York, told GI & Hepatology News.

“This does not mean most patients are young. Rather, it means H pylori increases the likelihood of gastric cancer appearing earlier in life compared with non-H pylori cases.”

For the study, Patel and his colleagues, who presented their findings at the annual meeting of the College of American Pathologists (CAP) 2025, used 2016-2020 data from the National Inpatient Sample, which included records for adults with primary diagnoses of gastric cancer. They compared outcomes in patients whose cancer was associated with H pylori with outcomes in patients whose cancer was not.

Of 91,670 adult hospitalizations from 2016 to 2020, 1830 (2%) involved gastric cancer linked to H pylori. Patel said the low percentage resulted from focusing solely on diagnostic codes for primary diagnoses of gastric cancer and excluding secondary diagnoses.

These cancers were more than twice as prevalent in patients aged 18-49 years (3.97%) as in those older than 65 years (1.65%).

Septicemia Odds Higher in H pylori Group

Patients in the H pylori group also had a higher burden of comorbidities such as anemia, chronic blood loss, and metastatic cancer, according to the data. The researchers found that these patients also had significantly higher odds of septicemia (odds ratio, 1.62; 95% CI, 1.17-2.24; P = .003) and spent an average of 8 days in the hospital, 2 days longer than patients whose cancers were not associated with the infection.

Dipti M. Karamchandani, MD, a professor of pathology at the University of Texas Southwestern Medical Center in Dallas, who was not part of the study, said the longer hospital stays and greater risk for septicemia may be related to the increased comorbidities seen among people with H pylori infection in general. The infection is often associated with unsanitary conditions, and infected groups may also be more likely to experience malnutrition, anemia, or lower body reserves, for example, she said.

“Also, H pylori often causes gastric ulcers, even before causing cancer, and those patients may be prone to chronic blood loss,” Karamchandani said. “These are all reasons that these patients may be more prone to longer hospital stay.”

US Guidelines Lacking

H pylori infection is a strong predictor of gastric cancer, but it often goes undetected. “Sometimes we ignore the symptoms,” Patel said.

“There are no standard guidelines for screening for H pylori,” he added. “We need to stop the transition from H pylori to gastric cancer.”

“This abstract highlights an important issue: Gastric cancer is rising among younger adults in the US, particularly in noncardia gastric cancer, which is most often associated with Helicobacter pylori infection,” said Chul S. Hyun, MD, PhD, MPH, director of the Gastric Cancer Prevention and Screening Program at Yale School of Medicine in New Haven, Connecticut.

Hyun said the 2% figure for gastric cancer associated with H pylori likely reflected undercoding and “incomplete capture” in the database, and he noted that subgroup comparisons “become difficult to interpret reliably.” By extension, he said, the findings also underscore that “we are not adequately capturing H pylori in routine US coding and claims.”

“What we do know is that H pylori is the central, modifiable driver of risk, and that prevention efforts should focus on high prevalence populations — including Asian, Hispanic, and immigrant communities — where systematic H pylori screening remains a major unmet need,” said Hyun, who was not involved in the new research.

Currently no US society guideline recommends systematic screening, Hyun said. “Other high-incidence countries, such as Japan and Korea, already incorporate H pylori and gastroscopy screening into national policy,” he said. “For these reasons, guidelines urgently need to evolve to recommend targeted H pylori screening in high prevalence groups.”

Patel, Karamchandani, and Hyun reported having no relevant financial conflicts of interest.

A version of this article appeared on Medscape.com.

VIP Boot Camp: Expanding the Impact of VA Primary Care Mental Health With a Transdiagnostic Modular Group Program

Since 2007, Primary Care Mental Health Integration (PCMHI) at the Veterans Health Administration (VHA) has improved access to mental health care services for veterans by directly embedding mental health care professionals (HCPs) within primary care teams.1 Veterans referred to PCMHI often have co-occurring physical and mental health disorders.2 Untreated chronic physical and mental comorbidities can diminish the effectiveness of medical and mental health interventions. Growing evidence suggests that treatment of mental health conditions can improve physical health outcomes and management of physical conditions can improve mental health outcomes.2,3

Chronic pain and sleep disorders are common reasons patients present to primary care, and they often coexist with mental health comorbidities.4 Sleep disorders affect 50% to 88% of patients with chronic pain, and 40% of patients with sleep disorders report chronic pain.4 Research has found that chronic pain and sleep disorders increase the risk of suicide attempts and deaths by suicide. Addressing suicide prevention simultaneously with treating chronic pain and insomnia is encouraged.5

Background

PCMHI treats physical and mental health comorbidities with a collaborative framework and a biopsychosocial integrative model.6 PCMHI staff provide mental health services as members of primary care teams. An interdisciplinary PCMHI team can include, but is not limited to, psychologists, mental health social workers, psychiatrists, nurse practitioners, clinical pharmacists, and mental health nurses. Quality of care within this model is elevated, as mental and physical health are recognized as interconnected. Collaboration between primary care and mental health benefits veterans and the VHA by increasing access to mental health care, decreasing stigma associated with mental health treatment, improving health outcomes, and enhancing the likelihood of recovery, resulting in high patient satisfaction.6-8

In the existing PCMHI model, HCPs are encouraged to use short-term, evidence-based psychotherapies (EBPs).9 Veterans referred to PCMHI from primary care are typically able to attend 1 to 6 brief sessions of mental health treatment, often 20 to 30 minutes long. Most EBPs in PCMHI are disorder-specific, providing interventions focused on a single presenting problem (eg, insomnia, chronic pain, or posttraumatic stress disorder [PTSD]). For veterans with a single issue, this model can be very effective.1,10 However, the high rate of co-occurrence of mental and physical health issues can make it difficult to fully treat interrelated problems if the focus is on 1 specific diagnosis. Veterans with a need for additional (more comprehensive or intensive) mental health treatment are frequently referred to a higher, more resource-intensive level of mental health care, either in the VHA or the community. Examples of higher levels of mental health care include the longer term behavioral health interdisciplinary program (BHIP), sometimes called a mental health clinic (MHC), or a specialty mental health program such as a PTSD clinic.

As PCMHI continues to grow, new challenges have emerged related to staffing shortages and gaps in the clinical delivery of mental health treatment within the VHA. At the same time, demand for VHA mental health treatment has increased. However, a mental health professional shortage severely limits the ability of the VHA to meet this demand. In many systems, this shortage may result in more referrals being made to a higher level of mental health care because of fewer resources to provide comprehensive treatment in a less intensive PCMHI setting.8,10,11 This referral pattern can overburden higher level care, often with long wait times for treatment and lengthy lag times between appointments. Furthermore, these gaps in the clinical delivery of care cannot be effectively addressed by hiring additional mental health professionals. This strain on resources can impede access to care and negatively affect outcomes.10

Recent congressional reports highlight these issues, noting that demand for mental health services continues to outpace the capacity of both PCMHI and higher levels of mental health care, leading to delays in treatment that may negatively affect outcomes.8,10,11 These delays can be particularly detrimental for individuals with conditions requiring timely intervention.8,11 Some veterans are willing to engage with PCMHI in a primary care setting but may be reluctant to engage in general mental health treatment. These veterans might not receive the mental health care they need without PCMHI.

Group Psychotherapy

A group psychotherapy format can address gaps in care delivery and provide advantages for patients, mental health professionals, and the VHA. Group psychotherapy aligns with the US Department of Veterans Affairs (VA) Blueprint for Excellence and its 2018 to 2024 strategic plan, both of which underscore the need for more timely and efficient mental health services.12,13

Benefits of group psychotherapy include reductions in symptoms, decreased feelings of isolation, increased social support, decreased emotional suppression, and enhanced satisfaction with overall quality of life.14-17 Studies of veterans with PTSD have found less attrition among those who chose group therapy compared with individual therapy.14,18 Group psychotherapy improves access to care by enabling delivery to more patients.14 When compared with individual therapy, the group format allows a larger number of patients to be treated simultaneously, maximizing resources and reducing costs.3,19-21

VISN 9 CRH Innovation

The VA provides care to veterans through regionally distinct administrative systems known as Veterans Integrated Service Networks (VISNs). Clinical resource hubs (CRH) are VISN-based programs created to cover VA staffing shortages by virtually deploying HCPs into local VA systems until vacancies are filled. The national CRH vision of effectively using resources and innovative technologies to meet veterans’ health care needs, along with the above-referenced clinical gaps in the delivery of care, inspired the development of VIP Boot Camp within the VISN 9 CRH.22

Program Description

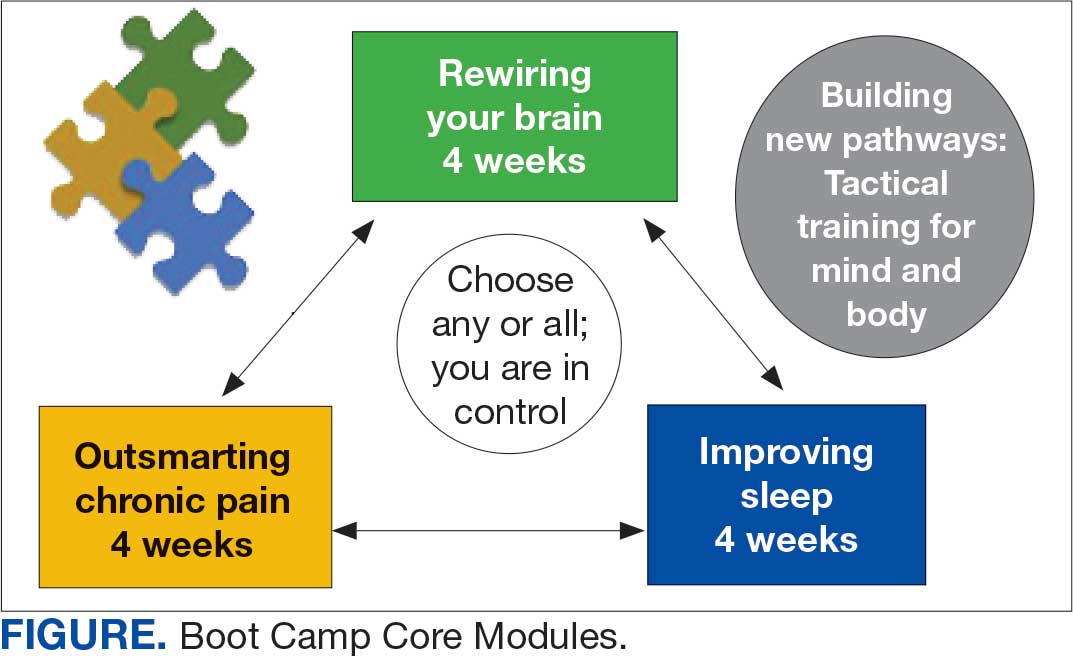

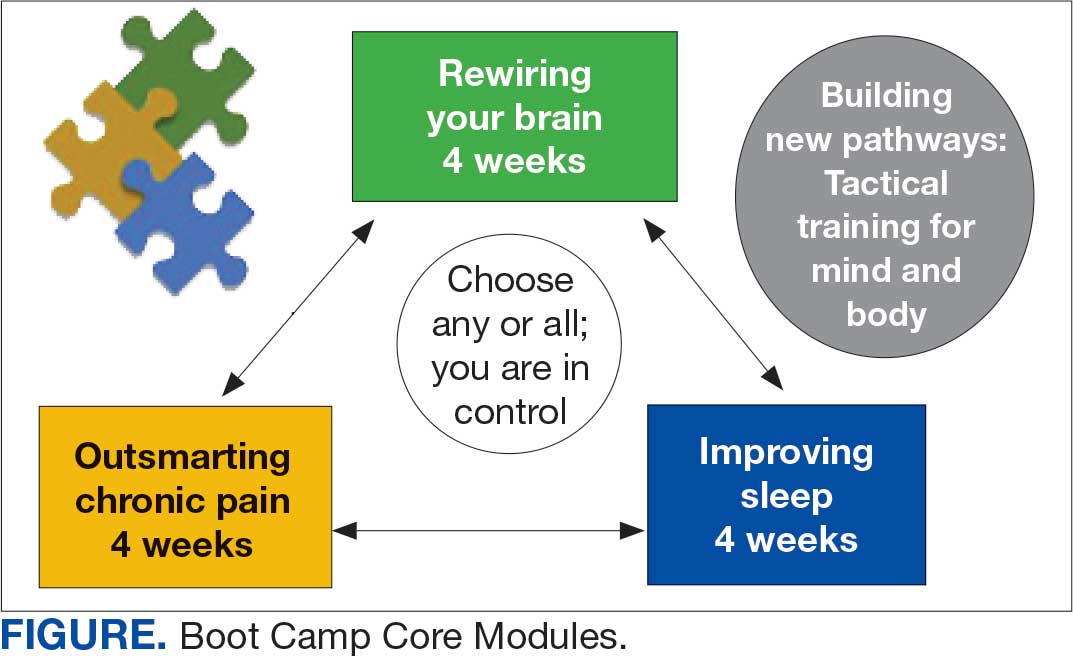

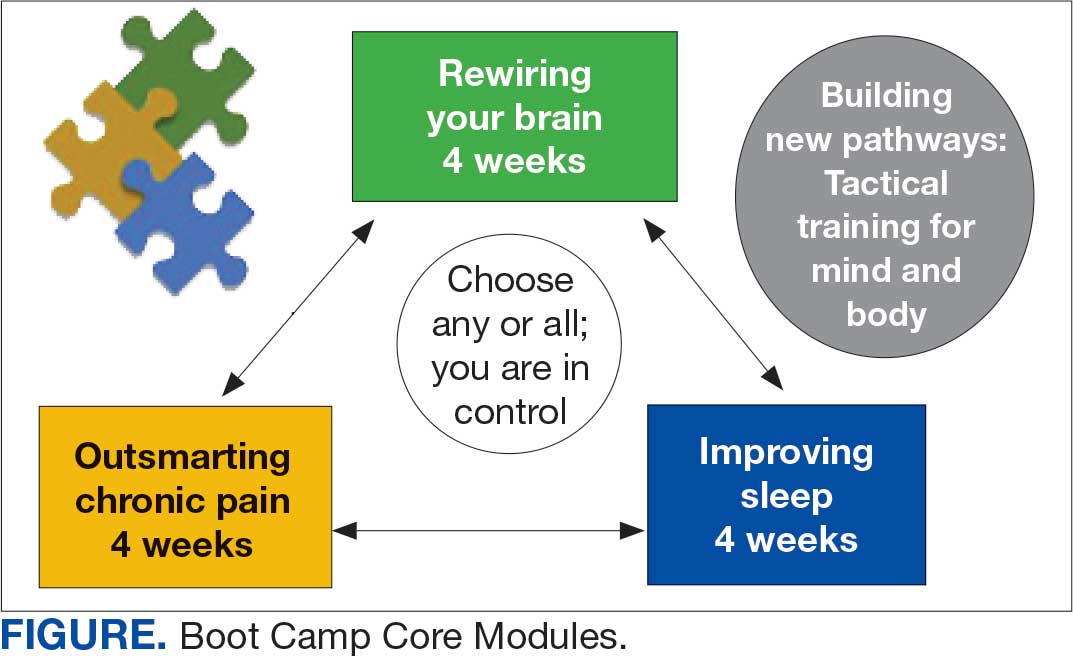

VIP Boot Camp is an evidence-informed group psychotherapy program designed to provide timely, brief, and comprehensive mental health treatment for veterans. VIP Boot Camp was developed to address the needs of veterans accessing PCMHI services who experience ≥ 1 of the often overlapping problems of anxiety/emotion regulation/stress, sleep difficulties, and chronic pain (Figure). VIP Boot Camp uses an integrative approach to highlight interconnections and similarities among these difficulties and their treatment. A primary vision of the program is to provide this comprehensive treatment within PCMHI (upstream) so additional referrals to higher levels of mental health care (downstream) may not be needed.

This design is intentional because it increases the number of individuals who can be treated upstream with comprehensive, preventive, and proactive care within PCMHI, which, over time, frees up resources in the BHIP for individuals requiring higher levels of care. This approach also aligns with the importance of early treatment for chronic pain and sleep disturbances, which are linked to increased risk of suicide attempts and deaths by suicide for veterans.5 National interest in VIP Boot Camp grew during fiscal year 2024 after it received the Gold Medal Recognition for Most Adoptable and Greatest Potential for Impact during VHA National Access Sprint Wave 3—Mental Health Call of Champions.

History

VIP Boot Camp began in August 2021 at VISN 9 as a 6-week virtual group for veterans with chronic pain. It was established to assist a large VA medical center that was experiencing PCMHI staffing shortages and lacked available PCMHI groups. Many veterans in the chronic pain group discussed co-occurring issues such as sleep disturbances, anxiety, and stress. The CRH team considered launching 2 separate groups to address these additional PCMHI-level issues; however, in developing the group material, which drew on multiple clinical approaches, the team recognized substantial overlap and interconnection among the themes.

The team discussed EBPs within the VHA and how certain interventions within these treatments could be helpful across many other co-occurring disorders. Integrated tactics (clinical interventions) were drawn from cognitive-behavioral therapy (for depression, insomnia, or chronic pain), acceptance and commitment therapy, prolonged exposure, cognitive processing therapy, dialectical behavior therapy, unified protocol, pain reprocessing therapy, emotional awareness and expression therapy, interpersonal neurobiology, and mindfulness. We collaborated with veterans during VIP Boot Camp groups to determine how to present and discuss complex interventions in ways that were clinically accurate, understandable, relatable, and relevant to their experiences.

To address accessibility issues, the chronic pain group was reduced to 4 weeks. A second 4-week module for anxiety, emotion regulation, and stress was developed, mirroring the tactics, language, and integrative approach of the revised chronic pain module. A similar integrative approach led to the development of the third and final 4-week module for sleep disturbances.

Current Program

The VIP Boot Camp consists of three 4-week integrated modules, each highlighting a critical area: sleep disturbances (Improving Sleep), chronic pain difficulties (Outsmarting Chronic Pain), and emotion regulation difficulties (Rewiring Your Brain). VIP Boot Camp is designed for veterans who are at the PCMHI level of care. Referrals are accepted for patients receiving treatment from primary care or PCMHI.

Guidelines for participation in VIP Boot Camp may differ across sites or VISNs. For example, a veteran who has been referred to the BHIP for medication management only or to a specialty MHC such as a pain clinic or PTSD clinic might also be appropriate and eligible for VIP Boot Camp.

Given the interconnectedness of foundational themes, elements, and practices across the VIP Boot Camp modules, the modules are offered in a rolling format with a veteran-centric “choose your own adventure” approach. Tactics are presented in the modules in a way that allows patients to begin with any 1 of the 3 modules and receive treatment that will help in the other areas. Participants choose their core module and initial treatment focus based on their values, needs, and goals. Individuals who complete a core module can end their VIP Boot Camp experience or continue to the next 4-week module for up to 3 modules.

The group is open to new individuals at the start of any 4-week module and closed for the remainder of its 4-week duration. This innovative rolling modular approach combines elements of open- and closed-group format, allowing for the flexibility and accessibility of an open group with the stability and peer support of a closed group.

Given the complicated and overlapping nature of chronic pain, emotion regulation/stress, and sleep disturbances, VIP Boot Camp acknowledges that everything is interconnected and difficulties in 1 area may impact other areas. The 3 interconnected modules with repeating themes provide coherence and consistency. Veterans learn how interconnections across difficulties can be leveraged so that tactics learned and practiced in 1 area can assist in other areas, changing the cycle of suffering into a cycle of growth.

VIP Boot Camp sessions are 90 minutes long, once weekly for 4 weeks, with 2 mental health professionals trained to lead a dynamic group psychotherapy experience that aims to be fun for participants. VIP Boot Camp synthesizes evidence-based and evidence-informed interventions, as well as techniques from VHA complementary and integrative health programs, psychoeducation, and interpersonal interventions that model connection, playfulness, and healthy boundaries. These varied strategies combine to equip veterans with practical tactics for self-management outside of sessions, a process described as “finding puzzle pieces.” VIP Boot Camp is built on the idea that people are more likely to adopt and practice any tactic after being taught why that tactic is important, and how it fits into their larger interconnected puzzle. After each session, participants are provided with additional asynchronous educational material to help reinforce what they have learned and practiced.

Although individuals may hesitate to participate in a group setting, they often find that the experience of community enhances and accelerates their treatment and gains. This involvement is highlighted in a core aspect of a VIP Boot Camp session called wins, during which participants learn how others on their Boot Camp team are implementing new skills and moving toward their personal values and objectives in a stepwise manner. Through these shared experiences, veterans discover how tactics working for others may serve as a model for their own personal objectives and plans for practice. Many participants describe the sense of relief upon realizing they are not alone in their experiences, along with the satisfaction of discovering they can support others in Boot Camp, as deeply meaningful and in line with their personal values.

While developed as a fully virtual group program, VIP Boot Camp can also be conducted in person. The virtual program has been successful and continues to spread across VISN 9. There are 8 virtual VIP Boot Camps running in VISN 9, with plans for continued expansion. In the VISN 9 CRH, Boot Camps typically have 10 to 12 participants. Additionally, as VIP Boot Camp grows within a location there are frequently sufficient referrals to support a second rolling group, which enables staggering of the module offerings to allow for even more timely treatment.

Training Program

VISN 9 CRH also developed a VIP Boot Camp 3-day intensive training program for PCMHI HCPs that consists of learning and practicing VIP Boot Camp material for chronic pain, emotion regulation/stress, sleep disturbances, mindfulness, and guided imagery, along with gaining experience as a VIP Boot Camp coleader. Feedback received from PCMHI HCPs who completed training has been positive. There is also a private Microsoft Teams channel for HCPs, which allows for resource sharing and community building among coleaders. More than 75 PCMHI HCPs have completed VIP Boot Camp training and > 25 VIP Boot Camps have been established at 4 additional VISNs.

The VISN 9 CRH VIP Boot Camp program initiated an implementation and effectiveness project with the Michael E. DeBakey VA Medical Center and the South Central Mental Illness Research, Education and Clinical Center. The focus of this collaboration is support for implementation and treatment effectiveness research with reports, articles, and a white paper on findings and best practices, alongside continued dissemination of the VIP Boot Camp program and training.

Conclusions

VIP Boot Camp is a PCMHI group program offering readily available, comprehensive, and integrative group psychotherapy services to veterans experiencing ≥ 1 of the following: chronic pain, emotion regulation/stress difficulties, or sleep disturbances. It was launched at the VISN 9 CRH with the goal of addressing clinical gaps in the delivery of mental health care by increasing the number of patients treated within PCMHI. The VIP Boot Camp model provides veterans the opportunity to transform cycles of suffering into cycles of growth through a single approach that can address multiple presenting and interconnected issues.

A 3-day VIP Boot Camp training program provides a quick and effective path for a PCMHI program to train HCPs to launch a VIP Boot Camp. The VISN 9 CRH will continue to champion VIP Boot Camp as a model for the successful provision of comprehensive and integrative mental health treatment within PCMHI at the VA. Through readily available access to comprehensive mental health treatment in an environment that promotes participant empowerment and social engagement, VIP Boot Camp represents an integrative and innovative model of mental health treatment that offers benefits to veteran participants, HCPs, and the VHA.

- Leung LB, Yoon J, Escarce JJ, et al. Primary care-mental health integration in the VA: shifting mental health services for common mental illnesses to primary care. Psychiatr Serv. 2018;69:403-409. doi:10.1176/appi.ps.201700190

- Zhang A, Park S, Sullivan JE, et al. The effectiveness of problem-solving therapy for primary care patients’ depressive and/or anxiety disorders: a systematic review and meta-analysis. J Am Board Fam Med. 2018;31:139-150. doi:10.3122/jabfm.2018.01.170270

- Hundt NE, Barrera TL, Robinson A, et al. A systematic review of cognitive behavioral therapy for depression in veterans. Mil Med. 2014;179:942-949. doi:10.7205/milmed-d-14-00128

- Jank R, Gallee A, Boeckle M, et al. Chronic pain and sleep disorders in primary care. Pain Res Treat. 2017;2017:1-9. doi:10.1155/2017/9081802

- Ashrafioun L, Bishop TM, Pigeon WR. The relationship between pain severity, insomnia, and suicide attempts among a national veteran sample initiating pain care. Psychosom Med. 2021;83:733-738. doi:10.1097/psy.0000000000000975

- Ramanuj P, Ferenchik E, Docherty M, et al. Evolving models of integrated behavioral health and primary care. Curr Psychiatry Rep. 2019;21:1. doi:10.1007/s11920-019-0985-4

- Post EP, Metzger M, Dumas P, et al. Integrating mental health into primary care within the Veterans Health Administration. Fam Syst Health. 2010;28:83-90. doi:10.1037/a0020130

- Smith TL, Kim B, Benzer JK, et al. FLOW: early results from a clinical demonstration project to improve the transition of patients with mental health disorders back to primary care. Psychol Serv. 2021;18:23-32. doi:10.1037/ser0000336

- Kearney LK, Post EP, Pomerantz AS, et al. Applying the interprofessional patient aligned care team in the department of veterans affairs transforming primary care. Am Psychol. 2014;69:399-408. doi:10.1037/a0035909

- US Government Accountability Office. Veterans health care: staffing challenges persist for fully integrating mental health and primary care services. December 15, 2022. Accessed July 9, 2025. https://www.gao.gov/products/gao-23-105372

- National Academies of Sciences, Engineering, and Medicine. Evaluation of the Department of Veterans Affairs Mental Health Services. National Academies Press; 2018. Accessed July 9, 2025. https://nap.nationalacademies.org/catalog/24915/evaluation-of-the-department-of-veterans-affairs-mental-health-services

- US Department of Veterans Affairs. Blueprint for excellence: achieving veterans’ excellence. October 6, 2014. Accessed July 9, 2025. https://www.volunteer.va.gov/docs/blueprintforexcellence_factsheet.PDF

- US Department of Veterans Affairs. Department of Veterans Affairs FY 2018-2024 strategic plan. Accessed July 9, 2025. https://www.calvet.ca.gov/Regulations/USDVA%20Strategic%20Plan%202018-2024.pdf

- Sripada RK, Bohnert KM, Ganoczy D, et al. Initial group versus individual therapy for posttraumatic stress disorder and subsequent follow-up treatment adequacy. Psychol Serv. 2016;13:349-355. doi:10.1037/ser0000077

- Burnett-Zeigler IE, Pfeiffer P, Zivin K, et al. Psychotherapy utilization for acute depression within the Veterans Affairs health care system. Psychol Serv. 2012;9:325-335. doi:10.1037/a0027957

- Kim JS, Prins A, Hirschhorn EW, et al. Preliminary investigation into the effectiveness of group webSTAIR for trauma-exposed veterans in primary care. Mil Med. 2024;189:e1403-e1408. doi:10.1093/milmed/usae052

- Jakupcak M, Blais RK, Grossbard J, et al. “Toughness” in association with mental health symptoms among Iraq and Afghanistan war veterans seeking Veterans Affairs health care. Psychol Men Masc. 2014;15:100-104. doi:10.1037/a0031508

- Stoycos SA, Berzenski SR, Beck JG, et al. Predictors of treatment completion in group psychotherapy for male veterans with posttraumatic stress disorder. J Trauma Stress. 2023;36:346-358. doi:10.1002/jts.22915

- Possemato K. The current state of intervention research for posttraumatic stress disorder within the primary care setting. J Clin Psychol Med Settings. 2011;18:268-280. doi:10.1007/s10880-011-9237-4

- Hunt MG, Rosenheck RA. Psychotherapy in mental health clinics of the Department of Veterans Affairs. J Clin Psychol. 2011;67:561-573. doi:10.1002/jclp.20788

- Khatri N, Marziali E, Tchernikov I, et al. Comparing telehealth-based and clinic-based group cognitive behavioral therapy for adults with depression and anxiety: a pilot study. Clin Interv Aging. 2014;9:765. doi:10.2147/cia.s57832

- Dangel J. Clinical resource hub increases veterans' access to care. VA News. January 12, 2025. Accessed September 3, 2025. https://news.va.gov/137439/clinical-resource-hub-increases-access-to-care/

Since 2007, Primary Care Mental Health Integration (PCMHI) at the Veterans Health Administration (VHA) has improved access to mental health care services for veterans by directly embedding mental health care professionals (HCPs) within primary care teams.1 Veterans referred to PCMHI often have co-occurring physical and mental health disorders.2 Untreated chronic physical and mental comorbidities can diminish the effectiveness of medical and mental health interventions. Growing evidence suggests that treatment of mental health conditions can improve physical health outcomes and management of physical conditions can improve mental health outcomes.2,3

Chronic pain and sleep disorders are common reasons patients present to primary care, and they often coexist with mental health comorbidities.4 Sleep disorders affect 50% to 88% of patients with chronic pain, and 40% of patients with sleep disorders report chronic pain.4 Research has found that chronic pain and sleep disorders increase the risk of suicide attempts and deaths by suicide. Addressing suicide prevention at the same time as treating chronic pain and insomnia is encouraged.5

Background

PCMHI treats physical and mental health comorbidities with a collaborative framework and a biopsychosocial integrative model.6 PCMHI staff provide mental health services as members of primary care teams. An interdisciplinary PCMHI team can include, but is not limited to, psychologists, mental health social workers, psychiatrists, nurse practitioners, clinical pharmacists, and mental health nurses. Quality of care within this model is elevated, as mental and physical health are recognized as interconnected. Collaboration between primary care and mental health benefits veterans and the VHA by increasing access to mental health care, decreasing stigma associated with mental health treatment, improving health outcomes, and enhancing the likelihood of recovery, resulting in high patient satisfaction.6-8

In the existing PCMHI model, HCPs are encouraged to use short-term, evidence-based psychotherapies (EBPs).9 Veterans referred to PCMHI from primary care are typically able to attend 1 to 6 brief sessions of mental health treatment, often 20 to 30 minutes long. Most EBPs in PCMHI are disorder-specific, providing interventions focused on a single presenting problem (eg, insomnia, chronic pain, or posttraumatic stress disorder [PTSD]). For veterans with a single issue, this model can be very effective.1,10 However, the high rate of co-occurrence of mental and physical health issues can make it difficult to fully treat interrelated problems if the focus is on 1 specific diagnosis. Veterans who need additional (more comprehensive or intensive) mental health treatment are frequently referred to a higher, more resource-intensive level of mental health care, either in the VHA or the community. Examples of higher levels of mental health care include the longer term behavioral health interdisciplinary program (BHIP), sometimes called a mental health clinic (MHC), or a specialty mental health program such as a PTSD clinic.

As PCMHI continues to grow, new challenges have emerged related to staffing shortages and gaps in the clinical delivery of mental health treatment within the VHA. At the same time, demand for VHA mental health treatment has increased. However, a mental health professional shortage severely limits the ability of the VHA to meet this demand. In many systems, this shortage may result in more referrals being made to a higher level of mental health care because of fewer resources to provide comprehensive treatment in a less intensive PCMHI setting.8,10,11 This referral pattern can overburden higher level care, often with long wait times for treatment and lengthy lag times between appointments. Furthermore, these gaps in the clinical delivery of care cannot be effectively addressed by hiring additional mental health professionals. This strain on resources can impede access to care and negatively affect outcomes.10

Recent congressional reports highlight these issues, noting that demand for mental health services continues to outpace the capacity of both PCMHI and higher levels of mental health care, leading to delays in treatment that may negatively affect outcomes.8,10,11 These delays can be particularly detrimental for individuals with conditions requiring timely intervention.8,11 Some veterans are willing to engage with PCMHI in a primary care setting but may be reluctant to engage in general mental health treatment. These veterans might not receive the mental health care they need without PCMHI.

Group Psychotherapy

A group psychotherapy format can address gaps in care delivery and provide advantages for patients, mental health professionals, and the VHA. Group psychotherapy aligns with the US Department of Veterans Affairs (VA) 2014 Blueprint for Excellence and 2018 to 2024 strategic plan, which underscore the need for more timely and efficient mental health services.12,13

Benefits of group psychotherapy include reductions in symptoms, decreased feelings of isolation, increased social support, decreased emotional suppression, and enhanced satisfaction with overall quality of life.14-17 Studies of veterans with PTSD have found less attrition among those who chose group therapy than among those who chose individual therapy.14,18 Group psychotherapy improves access to care by enabling delivery to more patients.14 Compared with individual therapy, the group format allows a larger number of patients to be treated simultaneously, maximizing resources and reducing costs.3,19-21

VISN 9 CRH Innovation

The VA provides care to veterans through regionally distinct administrative systems known as Veterans Integrated Service Networks (VISNs). Clinical resource hubs (CRHs) are VISN-based programs created to cover VA staffing shortages by virtually deploying HCPs into local VA systems until vacancies are filled. The national CRH vision of effectively using resources and innovative technologies to meet veterans’ health care needs, along with the clinical gaps in care delivery described above, inspired the development of VIP Boot Camp within the VISN 9 CRH.22

Program Description

VIP Boot Camp is an evidence-informed group psychotherapy program designed to provide timely, brief, and comprehensive mental health treatment for veterans. VIP Boot Camp was developed to address the needs of veterans accessing PCMHI services who experience ≥ 1 of the often overlapping problems of anxiety/emotion regulation/stress, sleep difficulties, and chronic pain (Figure). VIP Boot Camp uses an integrative approach to highlight interconnections and similarities among these difficulties and their treatment. A primary vision of the program is to provide this comprehensive treatment within PCMHI (upstream) so additional referrals to higher levels of mental health care (downstream) may not be needed.

This design is intentional: it increases the number of individuals who can be treated upstream with comprehensive, preventive, and proactive care within PCMHI, which over time frees up resources in the BHIP for individuals requiring higher levels of care. This approach also aligns with the importance of early treatment for chronic pain and sleep disturbances, which are linked to increased risk of suicide attempts and deaths by suicide for veterans.5 National interest in VIP Boot Camp grew during fiscal year 2024 after it received the Gold Medal Recognition for Most Adoptable and Greatest Potential for Impact during the VHA National Access Sprint Wave 3—Mental Health Call of Champions.

History

VIP Boot Camp began in August 2021 at VISN 9 as a 6-week virtual group for veterans with chronic pain. It was established to assist a large VA medical center experiencing PCMHI staffing shortages and lacking available PCMHI groups. Many veterans in the chronic pain group discussed co-occurring issues such as sleep disturbances, anxiety, and stress. The CRH team considered launching 2 separate groups to address these additional PCMHI-level issues; however, in developing the group material, which drew from multiple clinical approaches, the team recognized significant overlapping and interconnected themes.

The team discussed EBPs within the VHA and how certain interventions within these treatments could be helpful across many other co-occurring disorders. Integrated tactics (clinical interventions) were drawn from cognitive-behavioral therapy (for depression, insomnia, or chronic pain), acceptance and commitment therapy, prolonged exposure, cognitive processing therapy, dialectical behavior therapy, unified protocol, pain reprocessing therapy, emotional awareness and expression therapy, interpersonal neurobiology, and mindfulness. We collaborated with veterans during VIP Boot Camp groups to determine how to present and discuss complex interventions in ways that were clinically accurate, understandable, relatable, and relevant to their experiences.

To address accessibility issues, the chronic pain group was reduced to 4 weeks. A second 4-week module for anxiety, emotion regulation, and stress was developed, mirroring the tactics, language, and integrative approach of the revised chronic pain module. A similar integrative approach led to the development of the third and final 4-week module for sleep disturbances.

Current Program

The VIP Boot Camp consists of three 4-week integrated modules, each highlighting a critical area: sleep disturbances (Improving Sleep), chronic pain difficulties (Outsmarting Chronic Pain), and emotion regulation difficulties (Rewiring Your Brain). VIP Boot Camp is designed for veterans who are at the PCMHI level of care. Referrals are accepted for patients receiving treatment from primary care or PCMHI.

Guidelines for participation in VIP Boot Camp may differ across sites or VISNs. For example, a veteran who has been referred to the BHIP for medication management only or to a specialty MHC such as a pain clinic or PTSD clinic might also be appropriate and eligible for VIP Boot Camp.

Given the interconnectedness of foundational themes, elements, and practices across the VIP Boot Camp modules, the modules are offered in a rolling format with a veteran-centric “choose your own adventure” approach. Tactics are presented in the modules in a way that allows patients to begin with any 1 of the 3 modules and receive treatment that will help in the other areas. Participants choose their core module and initial treatment focus based on their values, needs, and goals. Individuals who complete a core module can end their VIP Boot Camp experience or continue to the next 4-week module for up to 3 modules.

The group is open to new individuals at the start of any 4-week module and closed for the remainder of its 4-week duration. This innovative rolling modular approach combines elements of open- and closed-group format, allowing for the flexibility and accessibility of an open group with the stability and peer support of a closed group.
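The rolling enrollment rules described above (entry only at the start of a 4-week module, a closed group for the rest of that module, and up to 3 modules per participant) can be made concrete with a small scheduling sketch. This is a hypothetical illustration, not part of the VIP Boot Camp program materials: the order in which the modules rotate, the 0-indexed week numbering, and every function name below are assumptions. The `offset` parameter is an assumed way to model the second, staggered rolling group that some sites run.

```python
# Hypothetical sketch of a rolling, modular group schedule.
# ASSUMPTION: the rotation order below is illustrative; the source does not
# specify the sequence in which the modules repeat.
MODULES = ["Improving Sleep", "Outsmarting Chronic Pain", "Rewiring Your Brain"]
WEEKS_PER_MODULE = 4  # each module meets once weekly for 4 weeks
MAX_MODULES = len(MODULES)  # participants may complete up to 3 modules


def module_at(week: int, offset: int = 0) -> str:
    """Return the module running in a given week (weeks are 0-indexed).

    `offset` shifts the rotation by whole modules, modeling a second,
    staggered rolling group at the same site (an assumption).
    """
    return MODULES[(week // WEEKS_PER_MODULE + offset) % len(MODULES)]


def is_open_to_new_members(week: int) -> bool:
    """The group is open only in the first week of each module."""
    return week % WEEKS_PER_MODULE == 0


def next_open_week(week: int) -> int:
    """Earliest week at or after `week` when a new participant can join
    (ceiling of `week` to the next multiple of WEEKS_PER_MODULE)."""
    return -(-week // WEEKS_PER_MODULE) * WEEKS_PER_MODULE


def enrollment_path(join_week: int, n_modules: int, offset: int = 0) -> list[str]:
    """Modules completed by a participant who joins at `join_week`
    and stays for `n_modules` consecutive modules."""
    if not is_open_to_new_members(join_week):
        raise ValueError("group is closed mid-module; join at the next module start")
    if not 1 <= n_modules <= MAX_MODULES:
        raise ValueError("participants complete between 1 and 3 modules")
    return [
        module_at(join_week + i * WEEKS_PER_MODULE, offset)
        for i in range(n_modules)
    ]
```

Under this assumed rotation, a veteran who joins at week 4 and stays for all 3 modules completes Outsmarting Chronic Pain, Rewiring Your Brain, and Improving Sleep, in that order. Because the 3 modules cycle continuously, any entry point covers all 3 areas within 12 weeks, which is the property that lets participants "choose their own adventure" without waiting for a fixed start date.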

Given the complicated and overlapping nature of chronic pain, emotion regulation/stress, and sleep disturbances, VIP Boot Camp acknowledges that everything is interconnected and difficulties in 1 area may impact other areas. The 3 interconnected modules with repeating themes provide coherence and consistency. Veterans learn how interconnections across difficulties can be leveraged so that tactics learned and practiced in 1 area can assist in other areas, changing the cycle of suffering into a cycle of growth.

VIP Boot Camp sessions are 90 minutes long, once weekly for 4 weeks, with 2 mental health professionals trained to lead a dynamic group psychotherapy experience that aims to be fun for participants. VIP Boot Camp synthesizes evidence-based and evidence-informed interventions, as well as techniques from VHA complementary and integrative health programs, psychoeducation, and interpersonal interventions that model connection, playfulness, and healthy boundaries. These varied strategies combine to equip veterans with practical tactics for self-management outside of sessions, a process described as “finding puzzle pieces.” VIP Boot Camp is built on the idea that people are more likely to adopt and practice any tactic after being taught why that tactic is important and how it fits into their larger interconnected puzzle. After each session, participants receive additional asynchronous educational material to reinforce what they have learned and practiced.

Although individuals may hesitate to participate in a group setting, they often find that the experience of community enhances and accelerates their treatment and gains. This sense of community is highlighted in a core part of each VIP Boot Camp session called wins, during which participants learn how others on their Boot Camp team are implementing new skills and moving toward their personal values and objectives in a stepwise manner. Through these shared experiences, veterans discover how tactics that work for others may serve as a model for their own personal objectives and plans for practice. Many participants describe the relief of realizing they are not alone in their experiences, along with the satisfaction of discovering their ability to support others in Boot Camp, as deeply meaningful and in line with their personal values.

While developed as a fully virtual group program, VIP Boot Camp can also be conducted in person. The virtual program has been successful and continues to spread across VISN 9. There are 8 virtual VIP Boot Camps running in VISN 9, with plans for continued expansion. In the VISN 9 CRH, Boot Camps typically have 10 to 12 participants. Additionally, as VIP Boot Camp grows within a location there are frequently sufficient referrals to support a second rolling group, which enables staggering of the module offerings to allow for even more timely treatment.

Training Program

VISN 9 CRH also developed a VIP Boot Camp 3-day intensive training program for PCMHI HCPs that consists of learning and practicing VIP Boot Camp material for chronic pain, emotion regulation/stress, sleep disturbances, mindfulness, and guided imagery, along with gaining experience as a VIP Boot Camp coleader. Feedback received from PCMHI HCPs who completed training has been positive. There is also a private Microsoft Teams channel for HCPs, which allows for resource sharing and community building among coleaders. More than 75 PCMHI HCPs have completed VIP Boot Camp training and > 25 VIP Boot Camps have been established at 4 additional VISNs.

The VISN 9 CRH VIP Boot Camp program initiated an implementation and effectiveness project with the Michael E. DeBakey VA Medical Center and the South Central Mental Illness Research, Education and Clinical Center. The focus of this collaboration is support for implementation and treatment effectiveness research with reports, articles, and a white paper on findings and best practices, alongside continued dissemination of the VIP Boot Camp program and training.

Conclusions

VIP Boot Camp is a PCMHI group program offering readily available, comprehensive, and integrative group psychotherapy services to veterans experiencing ≥ 1 of the following: chronic pain, emotion regulation/stress difficulties, or sleep disturbances. It was launched at the VISN 9 CRH with the goal of addressing clinical gaps in the delivery of mental health care by increasing the number of patients treated within PCMHI. The VIP Boot Camp model provides veterans the opportunity to transform cycles of suffering into cycles of growth through a single approach that can address multiple presenting and interconnected issues.

A 3-day VIP Boot Camp training program provides a quick and effective path for a PCMHI program to train HCPs to launch a VIP Boot Camp. The VISN 9 CRH will continue to champion VIP Boot Camp as a model for the successful provision of comprehensive and integrative mental health treatment within PCMHI at the VA. Through readily available access to comprehensive mental health treatment in an environment that promotes participant empowerment and social engagement, VIP Boot Camp represents an integrative and innovative model of mental health treatment that offers benefits to veteran participants, HCPs, and the VHA.

Since 2007, Primary Care Mental Health Integration (PCMHI) at the Veterans Health Administration (VHA) has improved access to mental health care services for veterans by directly embedding mental health care professionals (HCPs) within primary care teams.1 Veterans referred to PCMHI often have co-occurring physical and mental health disorders.2 Untreated chronic physical and mental comorbidities can diminish the effectiveness of medical and mental health interventions. Growing evidence suggests that treatment of mental health conditions can improve physical health outcomes and management of physical conditions can improve mental health outcomes.2,3

Chronic pain and sleep disorders are common reasons patients present to primary care, and often coexist together with mental health comorbidities.4 Sleep disorders affect 50% to 88% of patients with chronic pain, and 40% of patients with sleep disorders report chronic pain.4 Research has found that chronic pain and sleep disorders increase the risk of suicide attempts and deaths by suicide. Addressing suicide prevention simultaneously with treating chronic pain and insomnia is encouraged.5

Background

PCMHI treats physical and mental health comorbidities with a collaborative framework and a biopsychosocial integrative model.6 PCMHI staff provide mental health services as members of primary care teams. An interdisciplinary PCMHI team can include, but is not limited to, psychologists, mental health social workers, psychiatrists, nurse practitioners, clinical pharmacists, and mental health nurses. Quality of care within this model is elevated, as mental and physical health are recognized as interconnected. Collaboration between primary care and mental health benefits veterans and the VHA by increasing access to mental health care, decreasing stigma associated with mental health treatment, improving health outcomes, and enhancing the likelihood of recovery, resulting in high patient satisfaction.6-8

In the existing PCMHI model, HCPs are encouraged to use short-term, evidence-based psychotherapies (EBPs).9 Veterans referred to PCMHI from primary care are typically able to attend 1 to 6 brief sessions of mental health treatment, often 20 to 30 minutes long. Most EBPs in PCMHI are disorder- specific, providing interventions focused on a single presenting problem (eg, insomnia, chronic pain, or posttraumatic stress disorder [PTSD]). For veterans with a single issue, this model can be very effective. 1,10 However, the high rate of co-occurrence of mental and physical health issues can make it difficult to fully treat interrelated problems if the focus is on 1 specific diagnosis. Veterans with a need for additional (more comprehensive or intensive) mental health treatment are frequently referred to a higher, more resource-intensive level of mental health care, either in the VHA or the community. Examples of higher levels of mental health care include the longer term behavioral health interdisciplinary program (BHIP), sometimes called a mental health clinic (MHC), or a specialty mental health program such as a PTSD clinic.

As PCMHI continues to grow, new challenges have emerged related to staffing shortages and gaps in the clinical delivery of mental health treatment within the VHA. At the same time, demand for VHA mental health treatment has increased. However, a mental health professional shortage severely limits the ability of the VHA to meet this demand. In many systems, this shortage may result in more referrals being made to a higher level of mental health care because of fewer resources to provide comprehensive treatment in a less intensive PCMHI setting.8,10,11 This referral pattern can overburden higher level care, often with long wait times for treatment and lengthy lag times between appointments. Furthermore, these gaps in the clinical delivery of care cannot be effectively addressed by hiring additional mental health professionals. This strain on resources can impede access to care and negatively affect outcomes.10

Recent congressional reports highlight these issues, noting that demand for mental health services continues to outpace the capacity of both PCMHI and higher levels of mental health care, leading to delays in treatment that may negatively affect outcomes.8,10,11 These delays can be particularly detrimental for individuals with conditions requiring timely intervention.8,11 Some veterans are willing to engage with PCMHI in a primary care setting but may be reluctant to engage in general mental health treatment. These veterans might not receive the mental health care they need without PCMHI.

Group Psychotherapy

A group psychotherapy format can address gaps in care delivery and provide advantages for patients, mental health professionals, and the VHA. Group psychotherapy aligns with the US Department of Veterans Affairs (VA) 2018 Blueprint for Excellence and 2018 to 2024 strategic plan, underscoring the need for more timely and efficient mental health services.12,13

Benefits of group psychotherapy include reductions in symptoms, decreased feelings of isolation, increased social support, decreased emotional suppression, and enhanced satisfaction with overall quality of life.14-17 Studies of veterans with PTSD have found less attrition among those who chose group therapy compared with individual therapy.14,18 Group psychotherapy improves access to care by enabling delivery to more patients.14 When compared with individual therapy, the group format allows for a large number of patients to be treated simultaneously, maximizing resources and reducing costs.3,19-21

VISN 9 CRH Innovation

The VA provides care to veterans through regionally distinct administrative systems known as Veterans Integrated Service Networks (VISNs). Clinical resource hubs (CRH) are VISN-based programs created to cover VA staffing shortages by virtually deploying HCPs into local VA systems until vacancies are filled. The national CRH vision of effectively using resources and innovative technologies to meet veterans’ health care needs, along with the above-referenced clinical gaps in the delivery of care, inspired the development of VIP Boot Camp within the VISN 9 CRH.22

Program Description

VIP Boot Camp is an evidence-informed group psychotherapy program designed to provide timely, brief, and comprehensive mental health treatment for veterans. VIP Boot Camp was developed to address the needs of veterans accessing PCMHI services who experience ≥ 1 of the often overlapping problems of anxiety/emotion regulation/stress, sleep difficulties, and chronic pain (Figure). VIP Boot Camp uses an integrative approach to highlight interconnections and similarities among these difficulties and their treatment. A primary vision of the program is to provide this comprehensive treatment within PCMHI (upstream) so additional referrals to higher levels of mental health care (downstream) may not be needed.

This design is intentional because it increases the number of individuals who can be treated upstream with comprehensive, preventive, and proactive care within PCMHI which, over time, frees up resources in the BHIP for individuals requiring higher levels of care. This approach also aligns with the importance of early treatment for chronic pain and sleep disturbances, which are linked to increased risk of suicide attempts and deaths by suicide for veterans.5 National interest for VIP Boot Camp grew during fiscal year 2024 after it received the Gold Medal Recognition for Most Adoptable and Greatest Potential for Impact during VHA National Access Sprint Wave 3—Mental Health Call of Champions.

History

VIP Boot Camp began in August 2021 at VISN 9 as a 6-week virtual group for veterans with chronic pain. It was established to assist a large VA medical center experiencing PCMHI staffing shortages and lacking available PCMHI groups. Many veterans in the chronic pain group discussed co-occurring issues such as sleep disturbances, anxiety, and stress. The CRH team considered launching 2 separate groups to address these additional PCMHI-level issues; however, in developing the group material which drew from multiple clinical approaches, the team recognized significant overlapping and interconnected themes.

The team discussed EBPs within the VHA and how certain interventions within these treatments could be helpful across many other co-occurring disorders. Integrated tactics (clinical interventions) were drawn from cognitive-behavioral therapy (for depression, insomnia, or chronic pain), acceptance and commitment therapy, prolonged exposure, cognitive processing therapy, dialectical behavior therapy, unified protocol, pain reprocessing therapy, emotional awareness and expression therapy, interpersonal neurobiology, and mindfulness. We collaborated with veterans during VIP Boot Camp groups to determine how to present and discuss complex interventions in ways that were clinically accurate, understandable, relatable, and relevant to their experiences.

To address accessibility issues, the chronic pain group was reduced to 4 weeks. A second 4-week module for anxiety, emotion regulation, and stress was developed, mirroring the tactics, language, and integrative approach of the revised chronic pain module. A similar integrative approach led to the development of the third and final 4-week module for sleep disturbances.

Current Program

The VIP Boot Camp consists of three 4-week integrated modules, each highlighting a critical area: sleep disturbances (Improving Sleep), chronic pain difficulties (Outsmarting Chronic Pain), and emotion regulation difficulties (Rewiring Your Brain). VIP Boot Camp is designed for veterans who are at the PCMHI level of care. Referrals are accepted for patients receiving treatment from primary care or PCMHI.

Guidelines for participation in VIP Boot Camp may differ across sites or VISNs. For example, a veteran who has been referred to the BHIP for medication management only or to a specialty MHC such as a pain clinic or PTSD clinic might also be appropriate and eligible for VIP Boot Camp.

Given the interconnectedness of foundational themes, elements, and practices across the VIP Boot Camp modules, the modules are offered in a rolling format with a veteran-centric “choose your own adventure” approach. Tactics are presented in the modules in a way that allows patients to begin with any 1 of the 3 modules and receive treatment that will help in the other areas. Participants choose their core module and initial treatment focus based on their values, needs, and goals. Individuals who complete a core module can end their VIP Boot Camp experience or continue to the next 4-week module for up to 3 modules.

The group is open to new individuals at the start of any 4-week module and closed for the remainder of its 4-week duration. This innovative rolling modular approach combines elements of open- and closed-group format, allowing for the flexibility and accessibility of an open group with the stability and peer support of a closed group.

Given the complicated and overlapping nature of chronic pain, emotion regulation/ stress, and sleep disturbances, VIP Boot Camp acknowledges that everything is interconnected and difficulties in 1 area may impact other areas. The 3 interconnected modules with repeating themes provide coherence and consistency. Veterans learn how interconnections across difficulties can be leveraged so that tactics learned and practiced in 1 area can assist in other areas, changing the cycle of suffering into a cycle of growth.

VIP Boot Camp sessions are 90 minutes long, once weekly for 4 weeks, with 2 mental health professionals trained to lead a dynamic group psychotherapy experience that aims to be fun for participants. VIP Boot Camp synthesizes evidence-based and evidence-informed interventions, as well as techniques from VHA complementary and integrative health programs, psychoeducation, and interpersonal interventions that model connection, playfulness, and healthy boundaries. These varied strategies combine to equip veterans with practical tactics for self-management outside of sessions, a process described as “finding puzzle pieces.” VIP Boot Camp is built on the idea that people are more likely to adopt and practice any tactic after being taught why that tactic is important, and how it fits into their larger interconnected puzzle. After each session, participants are provided with additional asynchronous educational material to help reinforce their learnings and practices.

Although individuals may hesitate to participate in a group setting, they often find that the experience of community enhances and accelerates their treatment and gains. This sense of community is highlighted in a core component of a VIP Boot Camp session called wins, during which participants learn how others on their Boot Camp team are implementing new skills and moving toward their personal values and objectives in a stepwise manner. Through these shared experiences, veterans discover how tactics working for others may serve as a model for their own personal objectives and plans for practice. Many participants describe the relief of realizing they are not alone in their experiences, along with the satisfaction of discovering their ability to support others in Boot Camp, as deeply meaningful and in line with their personal values.

While developed as a fully virtual group program, VIP Boot Camp can also be conducted in person. The virtual program has been successful and continues to spread across VISN 9. There are 8 virtual VIP Boot Camps running in VISN 9, with plans for continued expansion. In the VISN 9 CRH, Boot Camps typically have 10 to 12 participants. Additionally, as VIP Boot Camp grows within a location there are frequently sufficient referrals to support a second rolling group, which enables staggering of the module offerings to allow for even more timely treatment.

Training Program

VISN 9 CRH also developed a 3-day intensive VIP Boot Camp training program for PCMHI HCPs that consists of learning and practicing VIP Boot Camp material for chronic pain, emotion regulation/stress, sleep disturbances, mindfulness, and guided imagery, along with gaining experience as a VIP Boot Camp coleader. Feedback received from PCMHI HCPs who completed training has been positive. There is also a private Microsoft Teams channel for HCPs, which allows for resource sharing and community building among coleaders. More than 75 PCMHI HCPs have completed VIP Boot Camp training, and more than 25 VIP Boot Camps have been established at 4 additional VISNs.

The VISN 9 CRH VIP Boot Camp program initiated an implementation and effectiveness project with the Michael E. DeBakey VA Medical Center and the South Central Mental Illness Research, Education and Clinical Center. The collaboration focuses on supporting implementation and treatment effectiveness research, with reports, articles, and a white paper on findings and best practices, alongside continued dissemination of the VIP Boot Camp program and training.

Conclusions

VIP Boot Camp is a PCMHI group program offering readily available, comprehensive, and integrative group psychotherapy services to veterans experiencing ≥ 1 of the following: chronic pain, emotion regulation/stress, or sleep disturbances. It was launched at the VISN 9 CRH with the goal of addressing clinical gaps in the delivery of mental health care by increasing the number of patients treated within PCMHI. The VIP Boot Camp model provides veterans the opportunity to transform cycles of suffering into cycles of growth through a single approach that can address multiple presenting and interconnected issues.

A 3-day VIP Boot Camp training program provides a quick and effective path for a PCMHI program to train HCPs to launch a VIP Boot Camp. The VISN 9 CRH will continue to champion VIP Boot Camp as a model for the successful provision of comprehensive and integrative mental health treatment within PCMHI at the VA. Through readily available access to comprehensive mental health treatment in an environment that promotes participant empowerment and social engagement, VIP Boot Camp represents an integrative and innovative model of mental health treatment that offers benefits to veteran participants, HCPs, and the VHA.

VIP Boot Camp: Expanding the Impact of VA Primary Care Mental Health With a Transdiagnostic Modular Group Program

Healthy Diet, Exercise Cut Liver Death Risk in Drinkers

Following a healthy diet and engaging in a high level of physical activity can significantly lower the risk for alcohol-related liver mortality across all drinking patterns, including heavy and binge drinking, according to a new study from Indiana University researchers.

Notably, any amount of daily alcohol intake or binge drinking increases the liver mortality risk, the researchers found. However, that risk can be reduced somewhat with healthy dietary patterns and increased physical activity.

Although previous studies suggested that one or two drinks per day could be associated with lower risks for cardiovascular disease, cancer, or liver-related outcomes, confounders and unmeasured lifestyle behaviors may vary significantly among drinkers and influence their health risks, the researchers said.

“A significant knowledge gap exists regarding the interplay of dietary patterns and physical activity with alcohol-attributable liver-specific mortality,” said senior author Naga Chalasani, MD, AGAF, professor of gastroenterology and hepatology at the Indiana University School of Medicine in Indianapolis.